Compare commits

5 Commits

feat/list-

...

feat/pushj

| Author | SHA1 | Date | |
|---|---|---|---|
| | a8e2a835cc | | |
| | b194ffc504 | | |
| | d2ca782a3d | | |
| | f61e7d06b0 | | |
| | e84f43fca1 | | |

2

.github/actions/triage/action.yml

vendored

@@ -26,7 +26,7 @@ runs:
steps:
- name: Checkout code
if: ${{ github.event_name != 'pull_request' }}
uses: actions/checkout@v5
uses: actions/checkout@v4
- name: Run action
run: node ${{ github.action_path }}/dist/index.js
shell: sh

4

.github/workflows/audit-dependencies.yml

vendored

@@ -24,7 +24,7 @@ jobs:
runs-on: ubuntu-24.04
steps:
- name: Checkout
uses: actions/checkout@v5
uses: actions/checkout@v4
- name: Setup
uses: ./.github/actions/setup

@@ -34,7 +34,7 @@ jobs:

- name: Slack notification on failure
if: failure()
uses: slackapi/slack-github-action@v2.1.1
uses: slackapi/slack-github-action@v2.1.0
with:
webhook: ${{ inputs.debug == 'true' && secrets.SLACK_TEST_WEBHOOK_URL || secrets.SLACK_WEBHOOK_URL }}
webhook-type: incoming-webhook

2

.github/workflows/dispatch-event.yml

vendored

@@ -11,7 +11,7 @@ jobs:
name: Repository dispatch
runs-on: ubuntu-latest
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Dispatch event
if: ${{ github.event_name == 'workflow_dispatch' }}

24

.github/workflows/main.yml

vendored

@@ -6,6 +6,7 @@ on:
- opened
- reopened
- synchronize
- labeled
push:
branches:
- main

@@ -33,7 +34,7 @@ jobs:
- name: tune linux network
run: sudo ethtool -K eth0 tx off rx off

- uses: actions/checkout@v5
- uses: actions/checkout@v4
- uses: dorny/paths-filter@v3
id: filter
with:

@@ -62,7 +63,7 @@ jobs:
lint:
runs-on: ubuntu-24.04
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4
with:
fetch-depth: 0

@@ -78,7 +79,7 @@ jobs:
runs-on: ubuntu-24.04

steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -98,7 +99,7 @@ jobs:
needs: [changes, build]
if: ${{ needs.changes.outputs.needs_tests == 'true' }}
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -122,7 +123,7 @@ jobs:
needs: [changes, build]
if: ${{ needs.changes.outputs.needs_tests == 'true' }}
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -184,7 +185,7 @@ jobs:
options: --health-cmd pg_isready --health-interval 10s --health-timeout 5s --health-retries 5

steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -309,7 +310,7 @@ jobs:
env:
SUITE_NAME: ${{ matrix.suite }}
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -370,7 +371,6 @@ jobs:
# report-tag: ${{ matrix.suite }}
# job-summary: true

# This is unused, keeping it here for reference and possibly enabling in the future
tests-e2e-turbo:
runs-on: ubuntu-24.04
needs: [changes, build]

@@ -447,7 +447,7 @@ jobs:
env:
SUITE_NAME: ${{ matrix.suite }}
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -550,7 +550,7 @@ jobs:
MONGODB_VERSION: 6.0

steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -647,7 +647,7 @@ jobs:
needs: [changes, build]
if: ${{ needs.changes.outputs.needs_tests == 'true' }}
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

@@ -706,7 +706,7 @@ jobs:
actions: read # for fetching base branch bundle stats
pull-requests: write # for comments
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4

- name: Node setup
uses: ./.github/actions/setup

4

.github/workflows/post-release-templates.yml

vendored

@@ -17,7 +17,7 @@ jobs:
release_tag: ${{ steps.determine_tag.outputs.release_tag }}
steps:
- name: Checkout
uses: actions/checkout@v5
uses: actions/checkout@v4
with:
fetch-depth: 0
sparse-checkout: .github/workflows

@@ -54,7 +54,7 @@ jobs:
POSTGRES_DB: payloadtests
steps:
- name: Checkout
uses: actions/checkout@v5
uses: actions/checkout@v4

- name: Setup
uses: ./.github/actions/setup

6

.github/workflows/post-release.yml

vendored

@@ -23,7 +23,7 @@ jobs:
runs-on: ubuntu-24.04
if: ${{ github.event_name != 'workflow_dispatch' }}
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4
- uses: ./.github/actions/release-commenter
continue-on-error: true
env:

@@ -43,9 +43,9 @@ jobs:
if: ${{ github.event_name != 'workflow_dispatch' }}
steps:
- name: Checkout
uses: actions/checkout@v5
uses: actions/checkout@v4
- name: Github Releases To Discord
uses: SethCohen/github-releases-to-discord@v1.19.0
uses: SethCohen/github-releases-to-discord@v1.16.2
with:
webhook_url: ${{ secrets.DISCORD_RELEASES_WEBHOOK_URL }}
color: '16777215'

2

.github/workflows/pr-title.yml

vendored

@@ -14,7 +14,7 @@ jobs:
name: lint-pr-title
runs-on: ubuntu-24.04
steps:
- uses: amannn/action-semantic-pull-request@v6
- uses: amannn/action-semantic-pull-request@v5
id: lint_pr_title
env:
GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

2

.github/workflows/publish-prerelease.yml

vendored

@@ -18,7 +18,7 @@ jobs:
runs-on: ubuntu-24.04
steps:
- name: Checkout
uses: actions/checkout@v5
uses: actions/checkout@v4
- name: Setup
uses: ./.github/actions/setup
- name: Load npm token

2

.github/workflows/triage.yml

vendored

@@ -90,7 +90,7 @@ jobs:
if: github.event_name == 'issues'
runs-on: ubuntu-24.04
steps:
- uses: actions/checkout@v5
- uses: actions/checkout@v4
with:
ref: ${{ github.event.pull_request.base.ref }}
token: ${{ secrets.GITHUB_TOKEN }}

2

.gitignore

vendored

@@ -331,7 +331,5 @@ test/databaseAdapter.js
test/.localstack
test/google-cloud-storage
test/azurestoragedata/
/media-without-delete-access


licenses.csv

54

AGENTS.md

@@ -1,54 +0,0 @@
# Payload Monorepo Agent Instructions

## Project Structure

- Packages are located in the `packages/` directory.
- The main Payload package is `packages/payload`. This contains the core functionality.
- Database adapters are in `packages/db-*`.
- The UI package is `packages/ui`.
- The Next.js integration is in `packages/next`.
- Rich text editor packages are in `packages/richtext-*`.
- Storage adapters are in `packages/storage-*`.
- Email adapters are in `packages/email-*`.
- Plugins which add additional functionality are in `packages/plugin-*`.
- Documentation is in the `docs/` directory.
- Monorepo tooling is in the `tools/` directory.
- Test suites and configs are in the `test/` directory.
- LLMS.txt is at URL: https://payloadcms.com/llms.txt
- LLMS-FULL.txt is at URL: https://payloadcms.com/llms-full.txt

## Dev environment tips

- Any package can be built using a `pnpm build:*` script defined in the root `package.json`. These typically follow the format `pnpm build:<directory_name>`. The options are all of the top-level directories inside the `packages/` directory. Ex `pnpm build:db-mongodb` which builds the `packages/db-mongodb` package.
- ALL packages can be built with `pnpm build:all`.
- Use `pnpm dev` to start the monorepo dev server. This loads the default config located at `test/_community/config.ts`.
- Specific dev configs for each package can be run with `pnpm dev <directory_name>`. The options are all of the top-level directories inside the `test/` directory. Ex `pnpm dev fields` which loads the `test/fields/config.ts` config. The directory name can either encompass a single area of functionality or be the name of a specific package.

## Testing instructions

- There are unit, integration, and e2e tests in the monorepo.
- Unit tests can be run with `pnpm test:unit`.
- Integration tests can be run with `pnpm test:int`. Individual test suites can be run with `pnpm test:int <directory_name>`, which will point at `test/<directory_name>/int.spec.ts`.
- E2E tests can be run with `pnpm test:e2e`.
- All tests can be run with `pnpm test`.
- Prefer running `pnpm test:int` for verifying local code changes.

## PR Guidelines

- This repository follows conventional commits for PR titles
- PR Title format: <type>(<scope>): <title>. Title must start with a lowercase letter.
- Valid types are build, chore, ci, docs, examples, feat, fix, perf, refactor, revert, style, templates, test
- Prefer `feat` for new features and `fix` for bug fixes.
- Valid scopes are the following regex patterns: cpa, db-\*, db-mongodb, db-postgres, db-vercel-postgres, db-sqlite, drizzle, email-\*, email-nodemailer, email-resend, eslint, graphql, live-preview, live-preview-react, next, payload-cloud, plugin-cloud, plugin-cloud-storage, plugin-form-builder, plugin-import-export, plugin-multi-tenant, plugin-nested-docs, plugin-redirects, plugin-search, plugin-sentry, plugin-seo, plugin-stripe, richtext-\*, richtext-lexical, richtext-slate, storage-\*, storage-azure, storage-gcs, storage-uploadthing, storage-vercel-blob, storage-s3, translations, ui, templates, examples(\/(\w|-)+)?, deps
- Scopes should be chosen based upon the package(s) being modified. If multiple packages are being modified, choose the most relevant one or no scope at all.
- Example PR titles:
  - `feat(db-mongodb): add support for transactions`
  - `feat(richtext-lexical): add options to hide block handles`
  - `fix(ui): json field type ignoring editorOptions`
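The title rules listed in the PR Guidelines can be checked mechanically. The following is a minimal sketch, assuming a scope is optional and is validated only for its shape (it is not matched against the list of valid scopes). The names `PR_TYPES`, `PR_TITLE_PATTERN`, and `isValidPrTitle` are hypothetical and are not part of the repository's tooling.

```typescript
// Valid types, copied from the guideline list above.
const PR_TYPES = [
  'build', 'chore', 'ci', 'docs', 'examples', 'feat', 'fix',
  'perf', 'refactor', 'revert', 'style', 'templates', 'test',
]

// <type>(<scope>): <title>
// Assumptions: the scope is optional, and the title must start with a
// lowercase letter. The scope is only checked for being non-empty text
// inside parentheses, not against the list of valid scopes.
const PR_TITLE_PATTERN = new RegExp(
  `^(${PR_TYPES.join('|')})(\\([^()]+\\))?: [a-z].*$`,
)

// Hypothetical helper: returns true when a title matches the format.
function isValidPrTitle(title: string): boolean {
  return PR_TITLE_PATTERN.test(title)
}

console.log(isValidPrTitle('feat(db-mongodb): add support for transactions'))
console.log(isValidPrTitle('feat(ui): Add a button'))
```

The first call uses one of the example titles from the guidelines; the second fails the lowercase rule because the title starts with a capital letter.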

## Commit Guidelines

- This repository follows conventional commits for commit messages
- The first commit of a branch should follow the PR title format: <type>(<scope>): <title>. Follow the same rules as PR titles.
- Subsequent commits should prefer `chore` commits without a scope unless a specific package is being modified.
- These will eventually be squashed into the first commit when merging the PR.

@@ -107,7 +107,6 @@ The following options are available:
| `suppressHydrationWarning` | If set to `true`, suppresses React hydration mismatch warnings during the hydration of the root `<html>` tag. Defaults to `false`. |
| `theme` | Restrict the Admin Panel theme to use only one of your choice. Default is `all`. |
| `timezones` | Configure the timezone settings for the admin panel. [More details](#timezones) |
| `toast` | Customize the handling of toast messages within the Admin Panel. [More details](#toasts) |
| `user` | The `slug` of the Collection that you want to allow to login to the Admin Panel. [More details](#the-admin-user-collection). |

<Banner type="success">

@@ -299,20 +298,3 @@ We validate the supported timezones array by checking the value against the list
`timezone: true`. See [Date Fields](../fields/overview#date) for more
information.
</Banner>

## Toast

The `admin.toast` configuration allows you to customize the handling of toast messages within the Admin Panel, such as increasing the duration they are displayed and limiting the number of visible toasts at once.

<Banner type="info">
**Note:** The Admin Panel currently uses the
[Sonner](https://sonner.emilkowal.ski) library for toast notifications.
</Banner>

The following options are available for the `admin.toast` configuration:

| Option | Description | Default |
| ---------- | ---------------------------------------------------------------------------------------------------------------- | ------- |
| `duration` | The length of time (in milliseconds) that a toast message is displayed. | `4000` |
| `expand` | If `true`, will expand the message stack so that all messages are shown simultaneously without user interaction. | `false` |
| `limit` | The maximum number of toasts that can be visible on the screen at once. | `5` |
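As a rough illustration of how the three `admin.toast` options and their listed defaults fit together, here is a minimal, self-contained sketch. The `ToastOptions` type and the `resolveToastOptions` helper are hypothetical names used only for this example; they are not Payload's API or its implementation.

```typescript
// Hypothetical shape mirroring the three documented `admin.toast` options.
type ToastOptions = {
  duration?: number // milliseconds a toast message stays visible
  expand?: boolean // show the whole message stack without user interaction
  limit?: number // maximum number of toasts visible at once
}

// Defaults as listed in the options table above.
const toastDefaults: Required<ToastOptions> = {
  duration: 4000,
  expand: false,
  limit: 5,
}

// Illustrative helper (an assumption, not Payload's code): any option
// that is left unset falls back to its documented default.
function resolveToastOptions(options: ToastOptions = {}): Required<ToastOptions> {
  return {
    duration: options.duration ?? toastDefaults.duration,
    expand: options.expand ?? toastDefaults.expand,
    limit: options.limit ?? toastDefaults.limit,
  }
}

// Example: keep toasts on screen for 8 seconds and show at most 3 at once.
const resolved = resolveToastOptions({ duration: 8000, limit: 3 })
console.log(resolved)
```

In a real project the object passed to the helper here would sit under the `admin.toast` key of the Payload Config, as the section above describes.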

@@ -33,7 +33,7 @@ export const Users: CollectionConfig = {

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

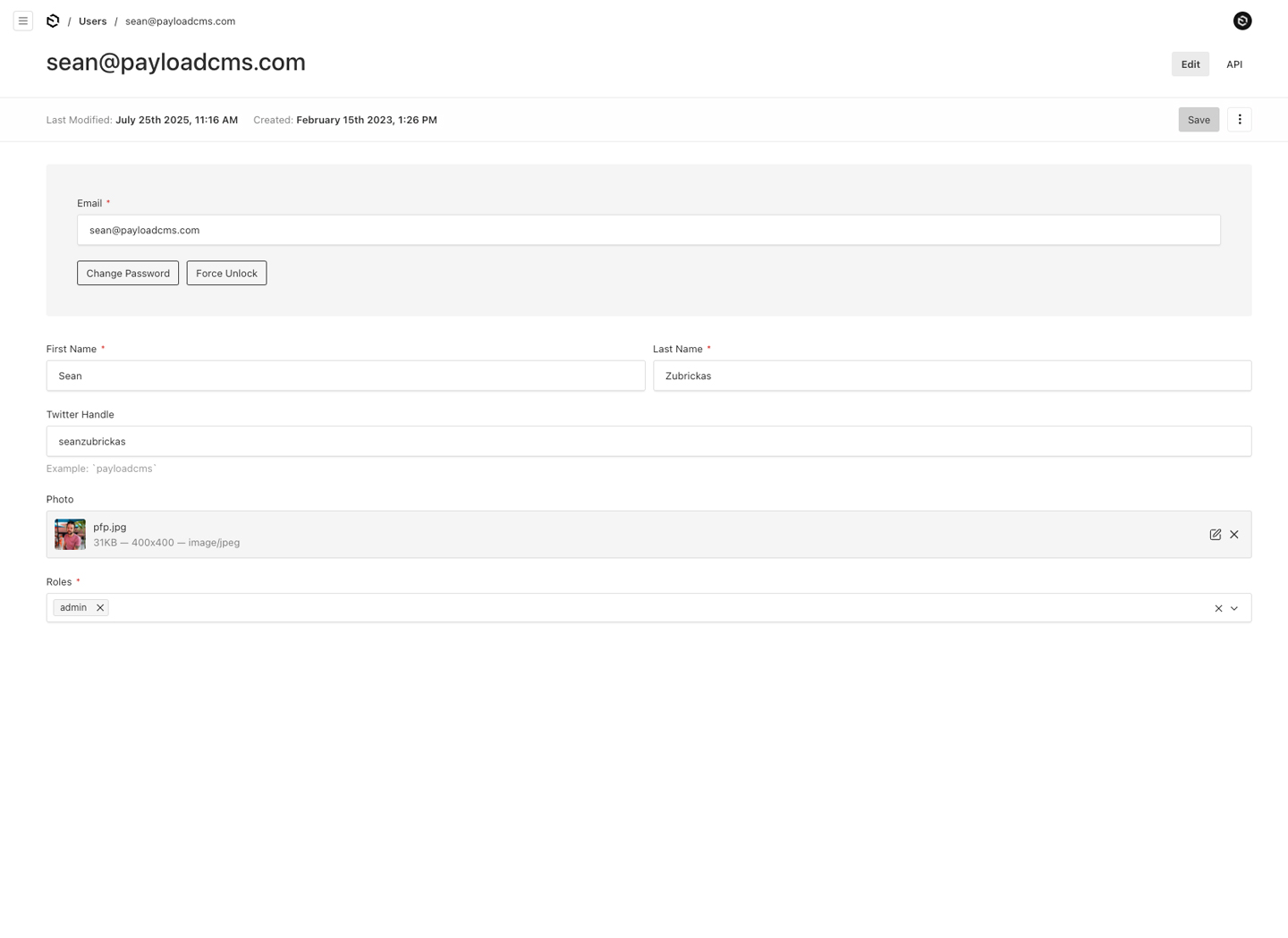

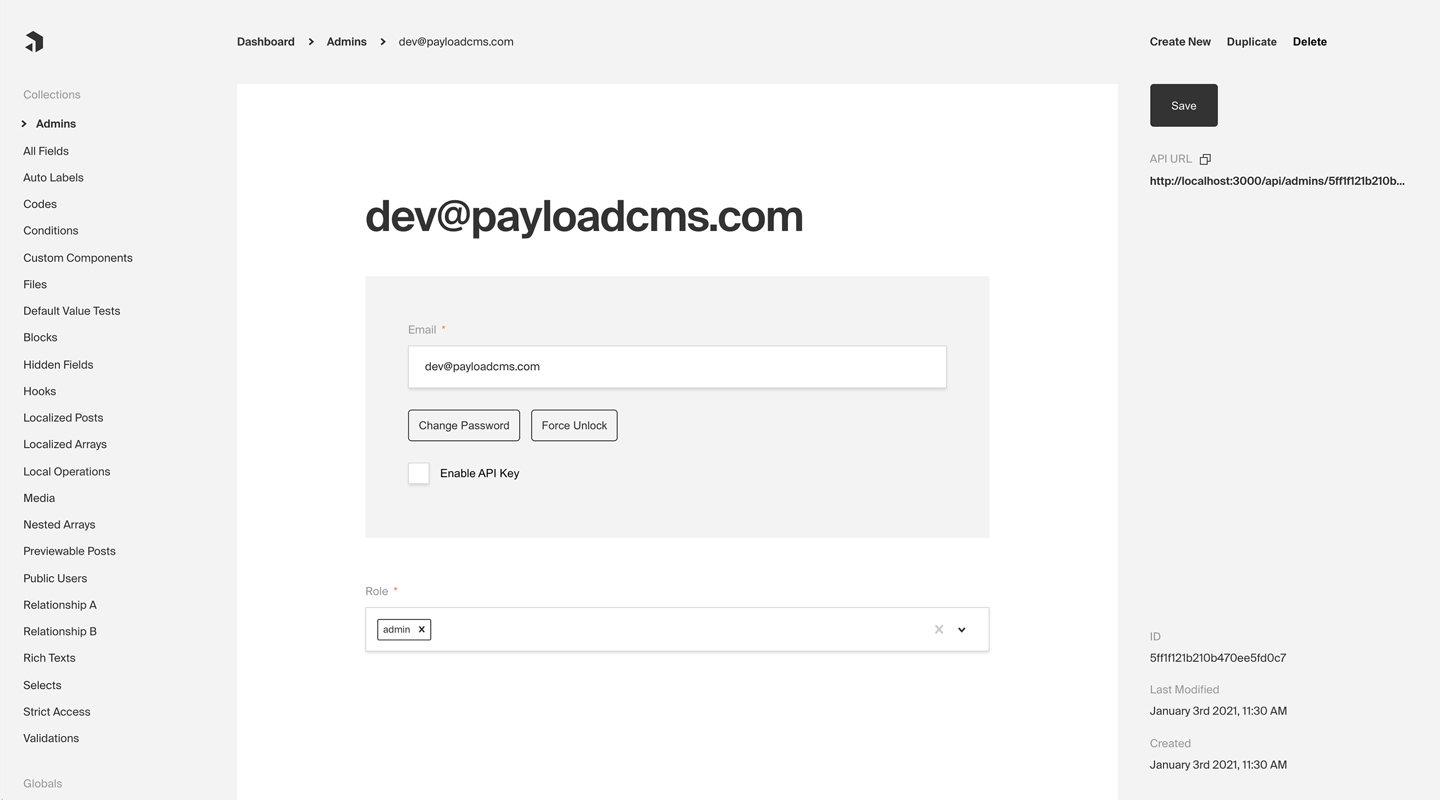

_Admin Panel screenshot depicting an Admins Collection with Auth enabled_

|

||||

|

||||

## Config Options

|

||||

|

||||

@@ -85,7 +85,7 @@ The following options are available:
| `versions` | Set to true to enable default options, or configure with object properties. [More details](../versions/overview#collection-config). |
| `defaultPopulate` | Specify which fields to select when this Collection is populated from another document. [More Details](../queries/select#defaultpopulate-collection-config-property). |
| `indexes` | Define compound indexes for this collection. This can be used to either speed up querying/sorting by 2 or more fields at the same time or to ensure uniqueness between several fields. |
| `forceSelect` | Specify which fields should be selected always, regardless of the `select` query which can be useful that the field exists for access control / hooks. [More details](../queries/select). |
| `forceSelect` | Specify which fields should be selected always, regardless of the `select` query which can be useful that the field exists for access control / hooks |
| `disableBulkEdit` | Disable the bulk edit operation for the collection in the admin panel and the REST API |

_\* An asterisk denotes that a property is required._

@@ -141,8 +141,7 @@ The following options are available:
| `livePreview` | Enable real-time editing for instant visual feedback of your front-end application. [More details](../live-preview/overview). |
| `components` | Swap in your own React components to be used within this Collection. [More details](#custom-components). |
| `listSearchableFields` | Specify which fields should be searched in the List search view. [More details](#list-searchable-fields). |
| `enableListViewSelectAPI` | Performance opt-in. When `true`, uses the Select API in the List View to query only the active columns as opposed to entire documents. [More details](#enable-list-view-select-api). |
| `pagination` | Set pagination-specific options for this Collection in the List View. [More details](#pagination). |
| `pagination` | Set pagination-specific options for this Collection. [More details](#pagination). |
| `baseFilter` | Defines a default base filter which will be applied to the List View (along with any other filters applied by the user) and internal links in Lexical Editor, |

<Banner type="warning">

@@ -273,66 +272,6 @@ export const Posts: CollectionConfig = {
these fields so your admin queries can remain performant.
</Banner>

## Enable List View Select API

When `true`, the List View will use the [Select API](../queries/select) to query only the _active_ columns as opposed to entire documents. This can greatly improve performance, especially for collections with large documents or many fields.

To enable this, set `enableListViewSelectAPI: true` in your Collection Config:

```ts
import type { CollectionConfig } from 'payload'

export const Posts: CollectionConfig = {
  // ...
  admin: {
    // ...
    // highlight-start
    enableListViewSelectAPI: true,
    // highlight-end
  },
}
```

<Banner type="info">
**Note:** The `enableListViewSelectAPI` property is labeled as experimental,
as it will likely become the default behavior in v4 and be deprecated.
</Banner>

Enabling this feature may cause unexpected behavior in some cases, however, such as when using hooks that rely on the full document data.

For example, if your component relies on a "title" field, this field will no longer be populated if the column is inactive:

```ts
import type { CollectionConfig } from 'payload'

export const Posts: CollectionConfig = {
  // ...
  fields: [
    // ...
    {
      name: 'myField',
      type: 'text',
      hooks: {
        afterRead: [
          ({ doc }) => doc.title, // The `title` field will no longer be populated by default, unless the column is active
        ],
      },
    },
  ],
}
```

To ensure title is always present, you will need to add that field to the [`forceSelect`](../queries/select) property in your Collection Config:

```ts
export const Posts: CollectionConfig = {
  // ...
  forceSelect: {
    title: true,
  },
}
```

## GraphQL

You can completely disable GraphQL for this collection by passing `graphQL: false` to your collection config. This will completely disable all queries, mutations, and types from appearing in your GraphQL schema.

@@ -84,7 +84,7 @@ The following options are available:
| `slug` \* | Unique, URL-friendly string that will act as an identifier for this Global. |
| `typescript` | An object with property `interface` as the text used in schema generation. Auto-generated from slug if not defined. |
| `versions` | Set to true to enable default options, or configure with object properties. [More details](../versions/overview#global-config). |
| `forceSelect` | Specify which fields should be selected always, regardless of the `select` query which can be useful that the field exists for access control / hooks. [More details](../queries/select). |
| `forceSelect` | Specify which fields should be selected always, regardless of the `select` query which can be useful that the field exists for access control / hooks |

_\* An asterisk denotes that a property is required._

@@ -131,29 +131,6 @@ localization: {

Since the filtering happens at the root level of the application and its result is not calculated every time you navigate to a new page, you may want to call `router.refresh` in a custom component that watches when values that affect the result change. In the example above, you would want to do this when `supportedLocales` changes on the tenant document.

## Experimental Options

Experimental options are features that may not be fully stable and may change or be removed in future releases.

These options can be enabled in your Payload Config under the `experimental` key. You can set them like this:

```ts
import { buildConfig } from 'payload'

export default buildConfig({
  // ...
  experimental: {
    localizeStatus: true,
  },
})
```

The following experimental options are available related to localization:

| Option | Description |
| -------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |
| **`localizeStatus`** | **Boolean.** When `true`, shows document status per locale in the admin panel instead of always showing the latest overall status. Opt-in for backwards compatibility. Defaults to `false`. |

## Field Localization

Payload Localization works on a **field** level—not a document level. In addition to configuring the base Payload Config to support Localization, you need to specify each field that you would like to localize.

@@ -70,7 +70,6 @@ The following options are available:
| **`admin`** | The configuration options for the Admin Panel, including Custom Components, Live Preview, etc. [More details](../admin/overview#admin-options). |
| **`bin`** | Register custom bin scripts for Payload to execute. [More Details](#custom-bin-scripts). |
| **`editor`** | The Rich Text Editor which will be used by `richText` fields. [More details](../rich-text/overview). |
| **`experimental`** | Configure experimental features for Payload. These may be unstable and may change or be removed in future releases. [More details](../experimental). |
| **`db`** \* | The Database Adapter which will be used by Payload. [More details](../database/overview). |
| **`serverURL`** | A string used to define the absolute URL of your app. This includes the protocol, for example `https://example.com`. No paths allowed, only protocol, domain and (optionally) port. |
| **`collections`** | An array of Collections for Payload to manage. [More details](./collections). |

@@ -158,7 +158,7 @@ export function MyCustomView(props: AdminViewServerProps) {

<Banner type="success">
**Tip:** For consistent layout and navigation, you may want to wrap your
Custom View with one of the built-in [Templates](./overview#templates).
Custom View with one of the built-in [Template](./overview#templates).
</Banner>

### View Templates

@@ -293,6 +293,7 @@ Here's an example of a custom `editMenuItems` component:

```tsx
import React from 'react'
import { PopupList } from '@payloadcms/ui'

import type { EditMenuItemsServerProps } from 'payload'

@@ -300,12 +301,12 @@ export const EditMenuItems = async (props: EditMenuItemsServerProps) => {
  const href = `/custom-action?id=${props.id}`

  return (
    <>
      <a href={href}>Custom Edit Menu Item</a>
      <a href={href}>
    <PopupList.ButtonGroup>
      <PopupList.Button href={href}>Custom Edit Menu Item</PopupList.Button>
      <PopupList.Button href={href}>
        Another Custom Edit Menu Item - add as many as you need!
      </a>
    </>
      </PopupList.Button>
    </PopupList.ButtonGroup>
  )
}
```

@@ -63,22 +63,3 @@ export const MyCollection: CollectionConfig = {
],
}
```
## Localized fields and MongoDB indexes

When you set `index: true` or `unique: true` on a localized field, MongoDB creates one index **per locale path** (e.g., `slug.en`, `slug.da-dk`, etc.). With many locales and indexed fields, this can quickly approach MongoDB's per-collection index limit.

If you know you'll query specifically by a locale, index only those locale paths using the collection-level `indexes` option instead of setting `index: true` on the localized field. This approach gives you more control and helps avoid unnecessary indexes.

```ts
import type { CollectionConfig } from 'payload'

export const Pages: CollectionConfig = {
  fields: [{ name: 'slug', type: 'text', localized: true }],
  indexes: [
    // Index English slug only (rather than all locales)
    { fields: ['slug.en'] },
    // You could also make it unique:
    // { fields: ['slug.en'], unique: true },
  ],
}
```

@@ -60,21 +60,21 @@ You can access Mongoose models as follows:

## Using other MongoDB implementations

You can import the `compatibilityOptions` object to get the recommended settings for other MongoDB implementations. Since these databases aren't officially supported by payload, you may still encounter issues even with these settings (please create an issue or PR if you believe these options should be updated):
You can import the `compatabilityOptions` object to get the recommended settings for other MongoDB implementations. Since these databases aren't officially supported by payload, you may still encounter issues even with these settings (please create an issue or PR if you believe these options should be updated):

```ts
import { mongooseAdapter, compatibilityOptions } from '@payloadcms/db-mongodb'
import { mongooseAdapter, compatabilityOptions } from '@payloadcms/db-mongodb'

export default buildConfig({
  db: mongooseAdapter({
    url: process.env.DATABASE_URI,
    // For example, if you're using firestore:
    ...compatibilityOptions.firestore,
    ...compatabilityOptions.firestore,
  }),
})
```

We export compatibility options for [DocumentDB](https://aws.amazon.com/documentdb/), [Azure Cosmos DB](https://azure.microsoft.com/en-us/products/cosmos-db) and [Firestore](https://cloud.google.com/firestore/mongodb-compatibility/docs/overview). Known limitations:
We export compatability options for [DocumentDB](https://aws.amazon.com/documentdb/), [Azure Cosmos DB](https://azure.microsoft.com/en-us/products/cosmos-db) and [Firestore](https://cloud.google.com/firestore/mongodb-compatibility/docs/overview). Known limitations:

- Azure Cosmos DB does not support transactions that update two or more documents in different collections, which is a common case when using Payload (via hooks).
- Azure Cosmos DB the root config property `indexSortableFields` must be set to `true`.

@@ -1,66 +0,0 @@

|

||||

---

|

||||

title: Experimental Features

|

||||

label: Overview

|

||||

order: 10

|

||||

desc: Enable and configure experimental functionality within Payload. These featuresmay be unstable and may change or be removed without notice.

|

||||

keywords: experimental, unstable, beta, preview, features, configuration, Payload, cms, headless, javascript, node, react, nextjs

|

||||

---

|

||||

|

||||

Experimental features allow you to try out new functionality before it becomes a stable part of Payload. These features may still be in active development, may have incomplete functionality, and can change or be removed in future releases without warning.

|

||||

|

||||

## How It Works

|

||||

|

||||

Experimental features are configured via the root-level `experimental` property in your [Payload Config](../configuration/overview). This property contains individual feature flags, each flag can be configured independently, allowing you to selectively opt into specific functionality.

|

||||

|

||||

```ts

|

||||

import { buildConfig } from 'payload'

|

||||

|

||||

const config = buildConfig({

|

||||

// ...

|

||||

experimental: {

|

||||

localizeStatus: true, // highlight-line

|

||||

},

|

||||

})

|

||||

```

|

||||

|

||||

## Experimental Options

|

||||

|

||||

The following options are available:

|

||||

|

||||

| Option | Description |

|

||||

| -------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| **`localizeStatus`** | **Boolean.** When `true`, shows document status per locale in the admin panel instead of always showing the latest overall status. Opt-in for backwards compatibility. Defaults to `false`. |

|

||||

|

||||

This list may change without notice.

|

||||

|

||||

## When to Use Experimental Features

|

||||

|

||||

You might enable an experimental feature when:

|

||||

|

||||

- You want early access to new capabilities before their stable release.

|

||||

- You can accept the risks of using potentially unstable functionality.

|

||||

- You are testing new features in a development or staging environment.

|

||||

- You wish to provide feedback to the Payload team on new functionality.

|

||||

|

||||

If you are working on a production application, carefully evaluate whether the benefits outweigh the risks. For most stable applications, it is recommended to wait until the feature is officially released.

|

||||

|

||||

<Banner type="success">
  <strong>Tip:</strong> To stay up to date on experimental features or share
  your feedback, visit the{' '}
  <a
    href="https://github.com/payloadcms/payload/discussions"
    target="_blank"
    rel="noopener noreferrer"
  >
    Payload GitHub Discussions
  </a>{' '}
  or{' '}
  <a
    href="https://github.com/payloadcms/payload/issues"
    target="_blank"
    rel="noopener noreferrer"
  >
    open an issue
  </a>
  .
</Banner>

@@ -81,7 +81,7 @@ To install a Database Adapter, you can run **one** of the following commands:

|

||||

|

||||

#### 2. Copy Payload files into your Next.js app folder

|

||||

|

||||

Payload installs directly in your Next.js `/app` folder, and you'll need to place some files into that folder for Payload to run. You can copy these files from the [Blank Template](https://github.com/payloadcms/payload/tree/main/templates/blank/src/app/%28payload%29) on GitHub. Once you have the required Payload files in place in your `/app` folder, you should have something like this:

|

||||

Payload installs directly in your Next.js `/app` folder, and you'll need to place some files into that folder for Payload to run. You can copy these files from the [Blank Template](<https://github.com/payloadcms/payload/tree/main/templates/blank/src/app/(payload)>) on GitHub. Once you have the required Payload files in place in your `/app` folder, you should have something like this:

|

||||

|

||||

```plaintext

|

||||

app/

|

||||

|

||||

@@ -162,11 +162,6 @@ const result = await payload.find({

|

||||

})

|

||||

```

|

||||

|

||||

<Banner type="info">
  `pagination`, `page`, and `limit` are three related properties [documented
  here](/docs/queries/pagination).
</Banner>

|

||||

|

||||

### Find by ID#collection-find-by-id

|

||||

|

||||

```js

|

||||

|

||||

@@ -207,7 +207,7 @@ Everything mentioned above applies to local development as well, but there are a

|

||||

### Enable Turbopack

|

||||

|

||||

<Banner type="warning">

|

||||

**Note:** In the future this will be the default. Use at your own risk.

|

||||

**Note:** In the future this will be the default. Use as your own risk.

|

||||

</Banner>

|

||||

|

||||

Add `--turbo` to your dev script to significantly speed up your local development server start time.

|

||||

|

||||

@@ -79,7 +79,6 @@ formBuilderPlugin({

|

||||

text: true,

|

||||

textarea: true,

|

||||

select: true,

|

||||

radio: true,

|

||||

email: true,

|

||||

state: true,

|

||||

country: true,

|

||||

@@ -294,46 +293,14 @@ Maps to a `textarea` input on your front-end. Used to collect a multi-line strin

|

||||

|

||||

Maps to a `select` input on your front-end. Used to display a list of options.

|

||||

|

||||

| Property       | Type     | Description                                                                     |
| -------------- | -------- | ------------------------------------------------------------------------------- |
| `name`         | string   | The name of the field.                                                          |
| `label`        | string   | The label of the field.                                                         |
| `defaultValue` | string   | The default value of the field.                                                 |
| `placeholder`  | string   | The placeholder text for the field.                                             |
| `width`        | string   | The width of the field on the front-end.                                        |
| `required`     | checkbox | Whether or not the field is required when submitted.                            |
| `options`      | array    | An array of objects that define the select options. See below for more details. |

|

||||

|

||||

#### Select Options

|

||||

|

||||

Each option in the `options` array defines a selectable choice for the select field.

|

||||

|

||||

| Property | Type   | Description                         |
| -------- | ------ | ----------------------------------- |
| `label`  | string | The display text for the option.    |
| `value`  | string | The value submitted for the option. |
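
As a rough illustration of the shape these tables describe, a select field could be modelled as below. The `SelectOption` and `SelectField` type names, the `hasValidDefault` helper, and the sample values are assumptions made for this sketch. They are not taken from the plugin's exported types, and `required` is modelled as a boolean on the assumption that a checkbox stores `true`/`false`.

```ts
// Hypothetical types mirroring the property tables above.
type SelectOption = {
  label: string // display text for the option
  value: string // value submitted for the option
}

type SelectField = {
  name: string
  label?: string
  defaultValue?: string
  placeholder?: string
  width?: string
  required?: boolean
  options: SelectOption[]
}

// Sample data, invented for illustration.
const sizeField: SelectField = {
  name: 'size',
  label: 'Size',
  defaultValue: 'm',
  required: true,
  options: [
    { label: 'Small', value: 's' },
    { label: 'Medium', value: 'm' },
    { label: 'Large', value: 'l' },
  ],
}

// Hypothetical sanity check: a default value, if set, should match one option.
function hasValidDefault(field: SelectField): boolean {
  return (
    field.defaultValue === undefined ||
    field.options.some((option) => option.value === field.defaultValue)
  )
}

console.log(hasValidDefault(sizeField))
```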

|

||||

|

||||

### Radio

|

||||

|

||||

Maps to radio button inputs on your front-end. Used to allow users to select a single option from a list of choices.

|

||||

|

||||

| Property       | Type     | Description                                                                    |
| -------------- | -------- | ------------------------------------------------------------------------------ |
| `name`         | string   | The name of the field.                                                         |
| `label`        | string   | The label of the field.                                                        |
| `defaultValue` | string   | The default value of the field.                                                |
| `width`        | string   | The width of the field on the front-end.                                       |
| `required`     | checkbox | Whether or not the field is required when submitted.                           |
| `options`      | array    | An array of objects that define the radio options. See below for more details. |

|

||||

|

||||

#### Radio Options

|

||||

|

||||

Each option in the `options` array defines a selectable choice for the radio field.

|

||||

|

||||

| Property | Type   | Description                         |
| -------- | ------ | ----------------------------------- |
| `label`  | string | The display text for the option.    |
| `value`  | string | The value submitted for the option. |

|

||||

| Property       | Type     | Description                                              |
| -------------- | -------- | -------------------------------------------------------- |
| `name`         | string   | The name of the field.                                   |
| `label`        | string   | The label of the field.                                  |
| `defaultValue` | string   | The default value of the field.                          |
| `width`        | string   | The width of the field on the front-end.                 |
| `required`     | checkbox | Whether or not the field is required when submitted.     |
| `options`      | array    | An array of objects with `label` and `value` properties. |

|

||||

|

||||

### Email (field)

|

||||

|

||||

|

||||

@@ -80,11 +80,6 @@ type MultiTenantPluginConfig<ConfigTypes = unknown> = {

|

||||

* @default false

|

||||

*/

|

||||

isGlobal?: boolean

|

||||

/**

|

||||

* Opt out of adding the tenant field and place

|

||||

* it manually using the `tenantField` export from the plugin

|

||||

*/

|

||||

customTenantField?: boolean

|

||||

/**

|

||||

* Overrides for the tenant field, will override the entire tenantField configuration

|

||||

*/

|

||||

|

||||

@@ -148,12 +148,6 @@ export const Pages: CollectionConfig<'pages'> = {

|

||||

}

|

||||

```

|

||||

|

||||

<VideoDrawer
  id="Snqjng_w-QU"
  label="Watch default populate in action"
  drawerTitle="How to easily optimize Payload CMS requests with defaultPopulate"
/>

|

||||

|

||||

<Banner type="warning">

|

||||

**Important:** When using `defaultPopulate` on a collection with

|

||||

[Uploads](/docs/fields/upload) enabled and you want to select the `url` field,

|

||||

|

||||

@@ -269,13 +269,11 @@ Lexical does not generate accurate type definitions for your richText fields for

|

||||

|

||||

The Rich Text Field editor configuration has an `admin` property with the following options:

|

||||

|

||||

| Property                        | Description                                                                                                 |
| ------------------------------- | ----------------------------------------------------------------------------------------------------------- |
| **`placeholder`**               | Set this property to define a placeholder string for the field.                                             |
| **`hideGutter`**                | Set this property to `true` to hide this field's gutter within the Admin Panel.                             |
| **`hideInsertParagraphAtEnd`**  | Set this property to `true` to hide the "+" button that appears at the end of the editor.                   |
| **`hideDraggableBlockElement`** | Set this property to `true` to hide the draggable element that appears when you hover a node in the editor. |
| **`hideAddBlockButton`**        | Set this property to `true` to hide the "+" button that appears when you hover a node in the editor.        |

|

||||

| Property                       | Description                                                                              |
| ------------------------------ | ---------------------------------------------------------------------------------------- |
| **`placeholder`**              | Set this property to define a placeholder string for the field.                          |
| **`hideGutter`**               | Set this property to `true` to hide this field's gutter within the Admin Panel.          |
| **`hideInsertParagraphAtEnd`** | Set this property to `true` to hide the "+" button that appears at the end of the editor |

|

||||

|

||||

### Disable the gutter

|

||||

|

||||

|

||||

@@ -13,8 +13,8 @@ keywords: uploads, images, media, overview, documentation, Content Management Sy

|

||||

</Banner>

|

||||

|

||||

<LightDarkImage

|

||||

srcLight="https://payloadcms.com/images/docs/uploads-overview.jpg"

|

||||

srcDark="https://payloadcms.com/images/docs/uploads-overview.jpg"

|

||||

srcLight="https://payloadcms.com/images/docs/upload-admin.jpg"

|

||||

srcDark="https://payloadcms.com/images/docs/upload-admin.jpg"

|

||||

alt="Shows an Upload enabled collection in the Payload Admin Panel"

|

||||

caption="Admin Panel screenshot depicting a Media Collection with Upload enabled"

|

||||

/>

|

||||

|

||||

@@ -12,7 +12,7 @@ Extending on Payload's [Draft](/docs/versions/drafts) functionality, you can con

|

||||

Autosave relies on Versions and Drafts being enabled in order to function.

|

||||

</Banner>

|

||||

|

||||

|

||||

|

||||

_If Autosave is enabled, drafts will be created automatically as the document is modified and the Admin UI adds an indicator describing when the document was last saved to the top right of the sidebar._

|

||||

|

||||

## Options

|

||||

|

||||

@@ -14,7 +14,7 @@ Payload's Draft functionality builds on top of the Versions functionality to all

|

||||

|

||||

By enabling Versions with Drafts, your collections and globals can maintain _newer_, and _unpublished_ versions of your documents. It's perfect for cases where you might want to work on a document, update it and save your progress, but not necessarily make it publicly published right away. Drafts are extremely helpful when building preview implementations.

|

||||

|

||||

|

||||

|

||||

_If Drafts are enabled, the typical Save button is replaced with new actions which allow you to either save a draft, or publish your changes._

|

||||

|

||||

## Options

|

||||

|

||||

@@ -13,7 +13,7 @@ keywords: version history, revisions, audit log, draft, publish, restore, autosa

|

||||

|

||||

When enabled, Payload will automatically scaffold a new Collection in your database to store versions of your document(s) over time, and the Admin UI will be extended with additional views that allow you to browse document versions, view diffs in order to see exactly what has changed in your documents (and when they changed), and restore documents back to prior versions easily.

|

||||

|

||||

|

||||

|

||||

_Comparing an old version to a newer version of a document_

|

||||

|

||||

**With Versions, you can:**

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "payload-monorepo",

|

||||

"version": "3.54.0",

|

||||

"version": "3.50.0",

|

||||

"private": true,

|

||||

"type": "module",

|

||||

"workspaces": [

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@payloadcms/admin-bar",

|

||||

"version": "3.54.0",

|

||||

"version": "3.50.0",

|

||||

"description": "An admin bar for React apps using Payload",

|

||||

"homepage": "https://payloadcms.com",

|

||||

"repository": {

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "create-payload-app",

|

||||

"version": "3.54.0",

|

||||

"version": "3.50.0",

|

||||

"homepage": "https://payloadcms.com",

|

||||

"repository": {

|

||||

"type": "git",

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@payloadcms/db-mongodb",

|

||||

"version": "3.54.0",

|

||||

"version": "3.50.0",

|

||||

"description": "The officially supported MongoDB database adapter for Payload",

|

||||

"homepage": "https://payloadcms.com",

|

||||

"repository": {

|

||||

|

||||

@@ -35,12 +35,7 @@ export const connect: Connect = async function connect(

|

||||

}

|

||||

|

||||

try {

|

||||

if (!this.connection) {

|

||||

this.connection = await mongoose.createConnection(urlToConnect, connectionOptions).asPromise()

|

||||

}

|

||||

|

||||

await this.connection.openUri(urlToConnect, connectionOptions)

|

||||

|

||||

this.connection = (await mongoose.connect(urlToConnect, connectionOptions)).connection

|

||||

if (this.useAlternativeDropDatabase) {

|

||||

if (this.connection.db) {

|

||||

// Firestore doesn't support dropDatabase, so we monkey patch

|

||||

@@ -80,8 +75,7 @@ export const connect: Connect = async function connect(

|

||||

if (!hotReload) {

|

||||

if (process.env.PAYLOAD_DROP_DATABASE === 'true') {

|

||||

this.payload.logger.info('---- DROPPING DATABASE ----')

|

||||

await this.connection.dropDatabase()

|

||||

|

||||

await mongoose.connection.dropDatabase()

|

||||

this.payload.logger.info('---- DROPPED DATABASE ----')

|

||||

}

|

||||

}

|

||||

|

||||

@@ -17,16 +17,10 @@ export const create: Create = async function create(

|

||||

|

||||

const options: CreateOptions = {

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

let doc

|

||||

|

||||

if (!data.createdAt) {

|

||||

data.createdAt = new Date().toISOString()

|

||||

}

|

||||

|

||||

transform({

|

||||

adapter: this,

|

||||

data,

|

||||

|

||||

@@ -14,10 +14,6 @@ export const createGlobal: CreateGlobal = async function createGlobal(

|

||||

) {

|

||||

const { globalConfig, Model } = getGlobal({ adapter: this, globalSlug })

|

||||

|

||||

if (!data.createdAt) {

|

||||

;(data as any).createdAt = new Date().toISOString()

|

||||

}

|

||||

|

||||

transform({

|

||||

adapter: this,

|

||||

data,

|

||||

@@ -28,8 +24,6 @@ export const createGlobal: CreateGlobal = async function createGlobal(

|

||||

|

||||

const options: CreateOptions = {

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

let [result] = (await Model.create([data], options)) as any

|

||||

|

||||

@@ -12,7 +12,6 @@ export const createGlobalVersion: CreateGlobalVersion = async function createGlo

|

||||

autosave,

|

||||

createdAt,

|

||||

globalSlug,

|

||||

localeStatus,

|

||||

parent,

|

||||

publishedLocale,

|

||||

req,

|

||||

@@ -26,24 +25,18 @@ export const createGlobalVersion: CreateGlobalVersion = async function createGlo

|

||||

|

||||

const options = {

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

const data = {

|

||||

autosave,

|

||||

createdAt,

|

||||

latest: true,

|

||||

localeStatus,

|

||||

parent,

|

||||

publishedLocale,

|

||||

snapshot,

|

||||

updatedAt,

|

||||

version: versionData,

|

||||

}

|

||||

if (!data.createdAt) {

|

||||

data.createdAt = new Date().toISOString()

|

||||

}

|

||||

|

||||

const fields = buildVersionGlobalFields(this.payload.config, globalConfig)

|

||||

|

||||

|

||||

@@ -12,7 +12,6 @@ export const createVersion: CreateVersion = async function createVersion(

|

||||

autosave,

|

||||

collectionSlug,

|

||||

createdAt,

|

||||

localeStatus,

|

||||

parent,

|

||||

publishedLocale,

|

||||

req,

|

||||

@@ -30,24 +29,18 @@ export const createVersion: CreateVersion = async function createVersion(

|

||||

|

||||

const options = {

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

const data = {

|

||||

autosave,

|

||||

createdAt,

|

||||

latest: true,

|

||||

localeStatus,

|

||||

parent,

|

||||

publishedLocale,

|

||||

snapshot,

|

||||

updatedAt,

|

||||

version: versionData,

|

||||

}

|

||||

if (!data.createdAt) {

|

||||

data.createdAt = new Date().toISOString()

|

||||

}

|

||||

|

||||

const fields = buildVersionCollectionFields(this.payload.config, collectionConfig)

|

||||

|

||||

|

||||

@@ -1,11 +1,11 @@

|

||||

import type { Destroy } from 'payload'

|

||||

|

||||

import mongoose from 'mongoose'

|

||||

|

||||

import type { MongooseAdapter } from './index.js'

|

||||

|

||||

export const destroy: Destroy = async function destroy(this: MongooseAdapter) {

|

||||

await this.connection.close()

|

||||

await mongoose.disconnect()

|

||||

|

||||

for (const name of Object.keys(this.connection.models)) {

|

||||

this.connection.deleteModel(name)

|

||||

}

|

||||

Object.keys(mongoose.models).map((model) => mongoose.deleteModel(model))

|

||||

}

|

||||

|

||||

@@ -331,7 +331,7 @@ export function mongooseAdapter({

|

||||

}

|

||||

}

|

||||

|

||||

export { compatibilityOptions } from './utilities/compatibilityOptions.js'

|

||||

export { compatabilityOptions } from './utilities/compatabilityOptions.js'

|

||||

|

||||

/**

|

||||

* Attempt to find migrations directory.

|

||||

|

||||

@@ -19,14 +19,11 @@ import { getBuildQueryPlugin } from './queries/getBuildQueryPlugin.js'

|

||||

import { getDBName } from './utilities/getDBName.js'

|

||||

|

||||

export const init: Init = function init(this: MongooseAdapter) {

|

||||

// Always create a scoped, **unopened** connection object

|

||||

// (no URI here; models compile per-connection and do not require an open socket)

|

||||

this.connection ??= mongoose.createConnection()

|

||||

|

||||

this.payload.config.collections.forEach((collection: SanitizedCollectionConfig) => {

|

||||

const schemaOptions = this.collectionsSchemaOptions?.[collection.slug]

|

||||

|

||||

const schema = buildCollectionSchema(collection, this.payload, schemaOptions)

|

||||

|

||||

if (collection.versions) {

|

||||

const versionModelName = getDBName({ config: collection, versions: true })

|

||||

|

||||

@@ -58,7 +55,7 @@ export const init: Init = function init(this: MongooseAdapter) {

|

||||

const versionCollectionName =

|

||||

this.autoPluralization === true && !collection.dbName ? undefined : versionModelName

|

||||

|

||||

this.versions[collection.slug] = this.connection.model(

|

||||

this.versions[collection.slug] = mongoose.model(

|

||||

versionModelName,

|

||||

versionSchema,

|

||||

versionCollectionName,

|

||||

@@ -69,14 +66,14 @@ export const init: Init = function init(this: MongooseAdapter) {

|

||||

const collectionName =

|

||||

this.autoPluralization === true && !collection.dbName ? undefined : modelName

|

||||

|

||||

this.collections[collection.slug] = this.connection.model<any>(

|

||||

this.collections[collection.slug] = mongoose.model<any>(

|

||||

modelName,

|

||||

schema,

|

||||

collectionName,

|

||||

) as CollectionModel

|

||||

})

|

||||

|

||||

this.globals = buildGlobalModel(this) as GlobalModel

|

||||

this.globals = buildGlobalModel(this.payload) as GlobalModel

|

||||

|

||||

this.payload.config.globals.forEach((global) => {

|

||||

if (global.versions) {

|

||||

@@ -104,7 +101,7 @@ export const init: Init = function init(this: MongooseAdapter) {

|

||||

}),

|

||||

)

|

||||

|

||||

this.versions[global.slug] = this.connection.model<any>(

|

||||

this.versions[global.slug] = mongoose.model<any>(

|

||||

versionModelName,

|

||||

versionSchema,

|

||||

versionModelName,

|

||||

|

||||

@@ -1,13 +1,14 @@

|

||||

import type { Payload } from 'payload'

|

||||

|

||||

import mongoose from 'mongoose'

|

||||

|

||||

import type { MongooseAdapter } from '../index.js'

|

||||

import type { GlobalModel } from '../types.js'

|

||||

|

||||

import { getBuildQueryPlugin } from '../queries/getBuildQueryPlugin.js'

|

||||

import { buildSchema } from './buildSchema.js'

|

||||

|

||||

export const buildGlobalModel = (adapter: MongooseAdapter): GlobalModel | null => {

|

||||

if (adapter.payload.config.globals && adapter.payload.config.globals.length > 0) {

|

||||

export const buildGlobalModel = (payload: Payload): GlobalModel | null => {

|

||||

if (payload.config.globals && payload.config.globals.length > 0) {

|

||||

const globalsSchema = new mongoose.Schema(

|

||||

{},

|

||||

{ discriminatorKey: 'globalType', minimize: false, timestamps: true },

|

||||

@@ -15,13 +16,9 @@ export const buildGlobalModel = (adapter: MongooseAdapter): GlobalModel | null =

|

||||

|

||||

globalsSchema.plugin(getBuildQueryPlugin())

|

||||

|

||||

const Globals = adapter.connection.model(

|

||||

'globals',

|

||||

globalsSchema,

|

||||

'globals',

|

||||

) as unknown as GlobalModel

|

||||

const Globals = mongoose.model('globals', globalsSchema, 'globals') as unknown as GlobalModel

|

||||

|

||||

Object.values(adapter.payload.config.globals).forEach((globalConfig) => {

|

||||

Object.values(payload.config.globals).forEach((globalConfig) => {

|

||||

const globalSchema = buildSchema({

|

||||

buildSchemaOptions: {

|

||||

options: {

|

||||

@@ -29,7 +26,7 @@ export const buildGlobalModel = (adapter: MongooseAdapter): GlobalModel | null =

|

||||

},

|

||||

},

|

||||

configFields: globalConfig.fields,

|

||||

payload: adapter.payload,

|

||||

payload,

|

||||

})

|

||||

Globals.discriminator(globalConfig.slug, globalSchema)

|

||||

})

|

||||

|

||||

@@ -63,10 +63,7 @@ const migrateModelWithBatching = async ({

|

||||

},

|

||||

},

|

||||

})),

|

||||

{

|

||||

session, // Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

},

|

||||

{ session },

|

||||

)

|

||||

|

||||

skip += batchSize

|

||||

|

||||

@@ -179,13 +179,6 @@ export const queryDrafts: QueryDrafts = async function queryDrafts(

|

||||

|

||||

for (let i = 0; i < result.docs.length; i++) {

|

||||

const id = result.docs[i].parent

|

||||

|

||||

const localeStatus = result.docs[i].localeStatus || {}

|

||||

if (locale && localeStatus[locale]) {

|

||||

result.docs[i].status = localeStatus[locale]

|

||||

result.docs[i].version._status = localeStatus[locale]

|

||||

}

|

||||

|

||||

result.docs[i] = result.docs[i].version ?? {}

|

||||

result.docs[i].id = id

|

||||

}

|

||||

|

||||

@@ -26,8 +26,6 @@ export const updateGlobal: UpdateGlobal = async function updateGlobal(

|

||||

select,

|

||||

}),

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

transform({ adapter: this, data, fields, globalSlug, operation: 'write' })

|

||||

|

||||

@@ -39,8 +39,6 @@ export async function updateGlobalVersion<T extends TypeWithID>(

|

||||

select,

|

||||

}),

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

const query = await buildQuery({

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

import type { MongooseUpdateQueryOptions, UpdateQuery } from 'mongoose'

|

||||

import type { MongooseUpdateQueryOptions } from 'mongoose'

|

||||

import type { Job, UpdateJobs, Where } from 'payload'

|

||||

|

||||

import type { MongooseAdapter } from './index.js'

|

||||

@@ -14,13 +14,9 @@ export const updateJobs: UpdateJobs = async function updateMany(

|

||||

this: MongooseAdapter,

|

||||

{ id, data, limit, req, returning, sort: sortArg, where: whereArg },

|

||||

) {

|

||||

if (

|

||||

!(data?.log as object[])?.length &&

|

||||

!(data.log && typeof data.log === 'object' && '$push' in data.log)

|

||||

) {

|

||||

if (!(data?.log as object[])?.length) {

|

||||

delete data.log

|

||||

}

|

||||

|

||||

const where = id ? { id: { equals: id } } : (whereArg as Where)

|

||||

|

||||

const { collectionConfig, Model } = getCollection({

|

||||

@@ -40,8 +36,6 @@ export const updateJobs: UpdateJobs = async function updateMany(

|

||||

lean: true,

|

||||

new: true,

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

let query = await buildQuery({

|

||||

@@ -51,44 +45,17 @@ export const updateJobs: UpdateJobs = async function updateMany(

|

||||

where,

|

||||

})

|

||||

|

||||

let updateData: UpdateQuery<any> = data

|

||||

|

||||

const $inc: Record<string, number> = {}

|

||||

const $push: Record<string, { $each: any[] } | any> = {}

|

||||

|

||||

transform({

|

||||

$inc,

|

||||

$push,

|

||||

adapter: this,

|

||||

data,

|

||||

fields: collectionConfig.fields,

|

||||

operation: 'write',

|

||||

})

|

||||

|

||||

const updateOps: UpdateQuery<any> = {}

|

||||

|

||||

if (Object.keys($inc).length) {

|

||||

updateOps.$inc = $inc

|

||||

}

|

||||

if (Object.keys($push).length) {

|

||||

updateOps.$push = $push

|

||||

}

|

||||

if (Object.keys(updateOps).length) {

|

||||

updateOps.$set = updateData

|

||||

updateData = updateOps

|

||||

}

|

||||

transform({ adapter: this, data, fields: collectionConfig.fields, operation: 'write' })

|

||||

|

||||

let result: Job[] = []

|

||||

|

||||

try {

|

||||

if (id) {

|

||||

if (returning === false) {

|

||||

await Model.updateOne(query, updateData, options)

|

||||

transform({ adapter: this, data, fields: collectionConfig.fields, operation: 'read' })

|

||||

|

||||

await Model.updateOne(query, data, options)

|

||||

return null

|

||||

} else {

|

||||

const doc = await Model.findOneAndUpdate(query, updateData, options)

|

||||

const doc = await Model.findOneAndUpdate(query, data, options)

|

||||

result = doc ? [doc] : []

|

||||

}

|

||||

} else {

|

||||
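
For readers following the `updateJobs` hunk above, the operator-composition branch can be sketched in isolation. This is a simplified, standalone rendering of that logic, not the adapter's code: `composeUpdate` and the `UpdateOps` type are hypothetical names, and the `$inc` and `$push` maps are assumed to have been filled in already (the hunk passes them to `transform`).

```ts
// Hypothetical, simplified stand-in for the update document built in the hunk.
type UpdateOps = {
  $inc?: Record<string, number>
  $push?: Record<string, unknown>
  $set?: Record<string, unknown>
}

// Hypothetical helper: when any operator map is non-empty, the plain data is
// moved under `$set` so it can sit alongside `$inc` / `$push`; otherwise the
// plain data object is returned unchanged.
function composeUpdate(
  data: Record<string, unknown>,
  $inc: Record<string, number>,
  $push: Record<string, unknown>,
): Record<string, unknown> | UpdateOps {
  const updateOps: UpdateOps = {}

  if (Object.keys($inc).length) {
    updateOps.$inc = $inc
  }
  if (Object.keys($push).length) {
    updateOps.$push = $push
  }
  if (Object.keys(updateOps).length) {
    updateOps.$set = data
    return updateOps
  }

  return data
}

// Sample values invented for illustration.
console.log(composeUpdate({ processing: false }, { totalTried: 1 }, {}))
```

Mixing plain fields and update operators at the top level of one update document is ambiguous, which is presumably why the plain data is moved under `$set` when an operator is present.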

@@ -105,7 +72,7 @@ export const updateJobs: UpdateJobs = async function updateMany(

|

||||

query = { _id: { $in: documentsToUpdate.map((doc) => doc._id) } }

|

||||

}

|

||||

|

||||

await Model.updateMany(query, updateData, options)

|

||||

await Model.updateMany(query, data, options)

|

||||

|

||||

if (returning === false) {

|

||||

return null

|

||||

|

||||

@@ -58,8 +58,6 @@ export const updateMany: UpdateMany = async function updateMany(

|

||||

select,

|

||||

}),

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

let query = await buildQuery({

|

||||

|

||||

@@ -38,8 +38,6 @@ export const updateOne: UpdateOne = async function updateOne(

|

||||

select,

|

||||

}),

|

||||

session: await getSession(this, req),

|

||||

// Timestamps are manually added by the write transform

|

||||

timestamps: false,

|

||||

}

|

||||

|

||||

const query = await buildQuery({

|

||||

@@ -58,18 +56,11 @@ export const updateOne: UpdateOne = async function updateOne(

|

||||

const $push: Record<string, { $each: any[] } | any> = {}

|

||||

|

||||

transform({ $inc, $push, adapter: this, data, fields, operation: 'write' })

|

||||

|

||||

const updateOps: UpdateQuery<any> = {}

|

||||

|

||||

if (Object.keys($inc).length) {

|

||||

updateOps.$inc = $inc

|