Compare commits

2 Commits

perf/delet

...

postgres-d

| Author | SHA1 | Date | |
|---|---|---|---|
| | 0333e2cd1c | | |
| | bbf0c2474d | | |

14

.github/workflows/audit-dependencies.sh

vendored

@@ -1,20 +1,18 @@

|

||||

#!/bin/bash

|

||||

|

||||

severity=${1:-"high"}

|

||||

severity=${1:-"critical"}

|

||||

audit_json=$(pnpm audit --prod --json)

|

||||

output_file="audit_output.json"

|

||||

|

||||

echo "Auditing for ${severity} vulnerabilities..."

|

||||

|

||||

audit_json=$(pnpm audit --prod --json)

|

||||

|

||||

echo "${audit_json}" | jq --arg severity "${severity}" '

|

||||

.advisories | to_entries |

|

||||

map(select(.value.patched_versions != "<0.0.0" and (.value.severity == $severity or ($severity == "high" and .value.severity == "critical"))) |

|

||||

map(select(.value.patched_versions != "<0.0.0" and .value.severity == $severity) |

|

||||

{

|

||||

package: .value.module_name,

|

||||

vulnerable: .value.vulnerable_versions,

|

||||

fixed_in: .value.patched_versions,

|

||||

findings: .value.findings

|

||||

fixed_in: .value.patched_versions

|

||||

}

|

||||

)

|

||||

' >$output_file

|

||||

@@ -24,11 +22,7 @@ audit_length=$(jq 'length' $output_file)

|

||||

if [[ "${audit_length}" -gt "0" ]]; then

|

||||

echo "Actionable vulnerabilities found in the following packages:"

|

||||

jq -r '.[] | "\u001b[1m\(.package)\u001b[0m vulnerable in \u001b[31m\(.vulnerable)\u001b[0m fixed in \u001b[32m\(.fixed_in)\u001b[0m"' $output_file | while read -r line; do echo -e "$line"; done

|

||||

echo ""

|

||||

echo "Output written to ${output_file}"

|

||||

cat $output_file

|

||||

echo ""

|

||||

echo "This script can be rerun with: './.github/workflows/audit-dependencies.sh $severity'"

|

||||

exit 1

|

||||

else

|

||||

echo "No actionable vulnerabilities"

|

||||

|

||||
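The two `jq` filter variants in the hunk above differ only in how they match severity: one treats `critical` advisories as part of a `high` audit, the other requires an exact match. The following TypeScript sketch restates that selection logic for illustration; the `Advisory` type, the `selectActionable` name, and the `highIncludesCritical` flag are hypothetical and not part of the script. It assumes that a `patched_versions` value of `<0.0.0` marks an advisory with no fix available, which is why such entries are skipped.

```typescript
// Hypothetical shape of one entry under `.advisories` in the audit JSON.
type Advisory = {
  module_name: string
  severity: string
  vulnerable_versions: string
  patched_versions: string
}

type Finding = { package: string; vulnerable: string; fixed_in: string }

// Keep advisories that have a fix and match the requested severity.
// `highIncludesCritical` switches between the two variants in the diff.
function selectActionable(
  advisories: Record<string, Advisory>,
  severity: string,
  highIncludesCritical: boolean,
): Finding[] {
  return Object.values(advisories)
    .filter(
      (a) =>
        a.patched_versions !== '<0.0.0' &&
        (a.severity === severity ||
          (highIncludesCritical && severity === 'high' && a.severity === 'critical')),
    )
    .map((a) => ({
      package: a.module_name,
      vulnerable: a.vulnerable_versions,
      fixed_in: a.patched_versions,
    }))
}

// Made-up sample data, for illustration only.
const sampleAdvisories: Record<string, Advisory> = {
  a1: { module_name: 'pkg-a', severity: 'high', vulnerable_versions: '<1.2.0', patched_versions: '>=1.2.0' },
  a2: { module_name: 'pkg-b', severity: 'critical', vulnerable_versions: '<2.0.0', patched_versions: '>=2.0.0' },
  a3: { module_name: 'pkg-c', severity: 'critical', vulnerable_versions: '*', patched_versions: '<0.0.0' },
}

const inclusive = selectActionable(sampleAdvisories, 'high', true)
const strict = selectActionable(sampleAdvisories, 'high', false)
```

With the strict variant, a `high` audit no longer reports `critical` advisories, so the severity argument has to be chosen with that in mind.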

4

.github/workflows/audit-dependencies.yml

vendored

@@ -9,7 +9,7 @@ on:

|

||||

audit-level:

|

||||

description: The level of audit to run (low, moderate, high, critical)

|

||||

required: false

|

||||

default: high

|

||||

default: critical

|

||||

debug:

|

||||

description: Enable debug logging

|

||||

required: false

|

||||

@@ -46,7 +46,7 @@ jobs:

|

||||

"type": "section",

|

||||

"text": {

|

||||

"type": "mrkdwn",

|

||||

"text": "🚨 Actionable vulnerabilities found: <https://github.com/${{ github.repository }}/actions/runs/${{ github.run_id }}|View Script Run Details>"

|

||||

"text": "🚨 Actionable vulnerabilities found: <https://github.com/${{ github.repository }}/actions/runs/${{ github.run_id }}|View Details>"

|

||||

}

|

||||

},

|

||||

]

|

||||

|

||||

11

.github/workflows/main.yml

vendored

@@ -6,6 +6,7 @@ on:

|

||||

- opened

|

||||

- reopened

|

||||

- synchronize

|

||||

- labeled

|

||||

push:

|

||||

branches:

|

||||

- main

|

||||

@@ -152,7 +153,6 @@ jobs:

|

||||

matrix:

|

||||

database:

|

||||

- mongodb

|

||||

- firestore

|

||||

- postgres

|

||||

- postgres-custom-schema

|

||||

- postgres-uuid

|

||||

@@ -283,8 +283,6 @@ jobs:

|

||||

- fields__collections__Text

|

||||

- fields__collections__UI

|

||||

- fields__collections__Upload

|

||||

- group-by

|

||||

- folders

|

||||

- hooks

|

||||

- lexical__collections__Lexical__e2e__main

|

||||

- lexical__collections__Lexical__e2e__blocks

|

||||

@@ -303,7 +301,6 @@ jobs:

|

||||

- plugin-nested-docs

|

||||

- plugin-seo

|

||||

- sort

|

||||

- trash

|

||||

- versions

|

||||

- uploads

|

||||

env:

|

||||

@@ -370,7 +367,6 @@ jobs:

|

||||

# report-tag: ${{ matrix.suite }}

|

||||

# job-summary: true

|

||||

|

||||

# This is unused, keeping it here for reference and possibly enabling in the future

|

||||

tests-e2e-turbo:

|

||||

runs-on: ubuntu-24.04

|

||||

needs: [changes, build]

|

||||

@@ -421,8 +417,6 @@ jobs:

|

||||

- fields__collections__Text

|

||||

- fields__collections__UI

|

||||

- fields__collections__Upload

|

||||

- group-by

|

||||

- folders

|

||||

- hooks

|

||||

- lexical__collections__Lexical__e2e__main

|

||||

- lexical__collections__Lexical__e2e__blocks

|

||||

@@ -441,7 +435,6 @@ jobs:

|

||||

- plugin-nested-docs

|

||||

- plugin-seo

|

||||

- sort

|

||||

- trash

|

||||

- versions

|

||||

- uploads

|

||||

env:

|

||||

@@ -725,8 +718,6 @@ jobs:

|

||||

DO_NOT_TRACK: 1 # Disable Turbopack telemetry

|

||||

|

||||

- name: Analyze esbuild bundle size

|

||||

# Temporarily disable this for community PRs until this can be implemented in a separate workflow

|

||||

if: github.event.pull_request.head.repo.fork == false

|

||||

uses: exoego/esbuild-bundle-analyzer@v1

|

||||

with:

|

||||

metafiles: 'packages/payload/meta_index.json,packages/payload/meta_shared.json,packages/ui/meta_client.json,packages/ui/meta_shared.json,packages/next/meta_index.json,packages/richtext-lexical/meta_client.json'

|

||||

|

||||

3

.github/workflows/post-release.yml

vendored

@@ -17,9 +17,6 @@ env:

|

||||

|

||||

jobs:

|

||||

post_release:

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

runs-on: ubuntu-24.04

|

||||

if: ${{ github.event_name != 'workflow_dispatch' }}

|

||||

steps:

|

||||

|

||||

2

.gitignore

vendored

@@ -331,7 +331,5 @@ test/databaseAdapter.js

|

||||

test/.localstack

|

||||

test/google-cloud-storage

|

||||

test/azurestoragedata/

|

||||

/media-without-delete-access

|

||||

|

||||

|

||||

licenses.csv

|

||||

|

||||

7

.vscode/launch.json

vendored

@@ -139,13 +139,6 @@

|

||||

"request": "launch",

|

||||

"type": "node-terminal"

|

||||

},

|

||||

{

|

||||

"command": "pnpm tsx --no-deprecation test/dev.ts trash",

|

||||

"cwd": "${workspaceFolder}",

|

||||

"name": "Run Dev Trash",

|

||||

"request": "launch",

|

||||

"type": "node-terminal"

|

||||

},

|

||||

{

|

||||

"command": "pnpm tsx --no-deprecation test/dev.ts uploads",

|

||||

"cwd": "${workspaceFolder}",

|

||||

|

||||

@@ -77,9 +77,13 @@ If you wish to use your own MongoDB database for the `test` directory instead of

|

||||

|

||||

### Using Postgres

|

||||

|

||||

If you have postgres installed on your system, you can also run the test suites using postgres. By default, mongodb is used.

|

||||

Our test suites support automatic PostgreSQL + PostGIS setup using Docker. No local PostgreSQL installation is required. By default, MongoDB is used.

|

||||

|

||||

To do that, simply set the `PAYLOAD_DATABASE` environment variable to `postgres`.

|

||||

To use postgres, simply set the `PAYLOAD_DATABASE` environment variable to `postgres`.

|

||||

|

||||

```bash

|

||||

PAYLOAD_DATABASE=postgres pnpm dev {suite}

|

||||

```
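
The selection rule described above (use the value of `PAYLOAD_DATABASE`, fall back to MongoDB when it is unset) can be sketched in TypeScript as follows. This is only an illustration of the rule; the `resolveTestDatabase` helper is hypothetical and is not how the test setup is necessarily implemented.

```typescript
// Environment passed in explicitly so the sketch has no Node.js type dependency.
type Env = Record<string, string | undefined>

// Return the database named by PAYLOAD_DATABASE, or 'mongodb' when it is unset or empty.
function resolveTestDatabase(env: Env): string {
  const value = env.PAYLOAD_DATABASE?.trim()
  return value ? value : 'mongodb'
}

const withPostgres = resolveTestDatabase({ PAYLOAD_DATABASE: 'postgres' })
const withDefault = resolveTestDatabase({})
```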

|

||||

|

||||

### Running the e2e and int tests

|

||||

|

||||

|

||||

@@ -77,7 +77,7 @@ All auto-generated files will contain the following comments at the top of each

|

||||

|

||||

## Admin Options

|

||||

|

||||

All root-level options for the Admin Panel are defined in your [Payload Config](../configuration/overview) under the `admin` property:

|

||||

All options for the Admin Panel are defined in your [Payload Config](../configuration/overview) under the `admin` property:

|

||||

|

||||

```ts

|

||||

import { buildConfig } from 'payload'

|

||||

@@ -107,7 +107,6 @@ The following options are available:

|

||||

| `suppressHydrationWarning` | If set to `true`, suppresses React hydration mismatch warnings during the hydration of the root `<html>` tag. Defaults to `false`. |

|

||||

| `theme` | Restrict the Admin Panel theme to use only one of your choice. Default is `all`. |

|

||||

| `timezones` | Configure the timezone settings for the admin panel. [More details](#timezones) |

|

||||

| `toast` | Customize the handling of toast messages within the Admin Panel. [More details](#toasts) |

|

||||

| `user` | The `slug` of the Collection that you want to allow to login to the Admin Panel. [More details](#the-admin-user-collection). |

|

||||

|

||||

<Banner type="success">

|

||||

@@ -299,20 +298,3 @@ We validate the supported timezones array by checking the value against the list

|

||||

`timezone: true`. See [Date Fields](../fields/overview#date) for more

|

||||

information.

|

||||

</Banner>

|

||||

|

||||

## Toast

|

||||

|

||||

The `admin.toast` configuration allows you to customize the handling of toast messages within the Admin Panel, such as increasing the duration they are displayed and limiting the number of visible toasts at once.

|

||||

|

||||

<Banner type="info">

|

||||

**Note:** The Admin Panel currently uses the

|

||||

[Sonner](https://sonner.emilkowal.ski) library for toast notifications.

|

||||

</Banner>

|

||||

|

||||

The following options are available for the `admin.toast` configuration:

|

||||

|

||||

| Option | Description | Default |

|

||||

| ---------- | ---------------------------------------------------------------------------------------------------------------- | ------- |

|

||||

| `duration` | The length of time (in milliseconds) that a toast message is displayed. | `4000` |

|

||||

| `expand` | If `true`, will expand the message stack so that all messages are shown simultaneously without user interaction. | `false` |

|

||||

| `limit` | The maximum number of toasts that can be visible on the screen at once. | `5` |

|

||||

|

||||
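As a minimal sketch of how the `admin.toast` options and their documented defaults fit together, the TypeScript below merges user overrides over the defaults from the table. The `ToastOptions` type and `resolveToastOptions` helper are local stand-ins written for this example, not Payload exports, and the merge is an assumption about behavior rather than the actual implementation.

```typescript
// Local stand-in for the documented `admin.toast` options.
type ToastOptions = {
  duration?: number // milliseconds a toast stays visible
  expand?: boolean // show the whole message stack at once
  limit?: number // maximum number of visible toasts
}

// Defaults as listed in the options table.
const toastDefaults: Required<ToastOptions> = {
  duration: 4000,
  expand: false,
  limit: 5,
}

// Overrides win over defaults; omitted keys keep their default value.
function resolveToastOptions(overrides: ToastOptions = {}): Required<ToastOptions> {
  return { ...toastDefaults, ...overrides }
}

// Hypothetical `admin` fragment: longer display time, one toast at a time.
const adminConfig = {
  toast: resolveToastOptions({ duration: 8000, limit: 1 }),
}
```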

@@ -739,7 +739,7 @@ The `useDocumentInfo` hook provides information about the current document being

|

||||

| **`lastUpdateTime`** | Timestamp of the last update to the document. |

|

||||

| **`mostRecentVersionIsAutosaved`** | Whether the most recent version is an autosaved version. |

|

||||

| **`preferencesKey`** | The `preferences` key to use when interacting with document-level user preferences. [More details](./preferences). |

|

||||

| **`data`** | The saved data of the document. |

|

||||

| **`savedDocumentData`** | The saved data of the document. |

|

||||

| **`setDocFieldPreferences`** | Method to set preferences for a specific field. [More details](./preferences). |

|

||||

| **`setDocumentTitle`** | Method to set the document title. |

|

||||

| **`setHasPublishedDoc`** | Method to update whether the document has been published. |

|

||||

|

||||

@@ -33,7 +33,7 @@ export const Users: CollectionConfig = {

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

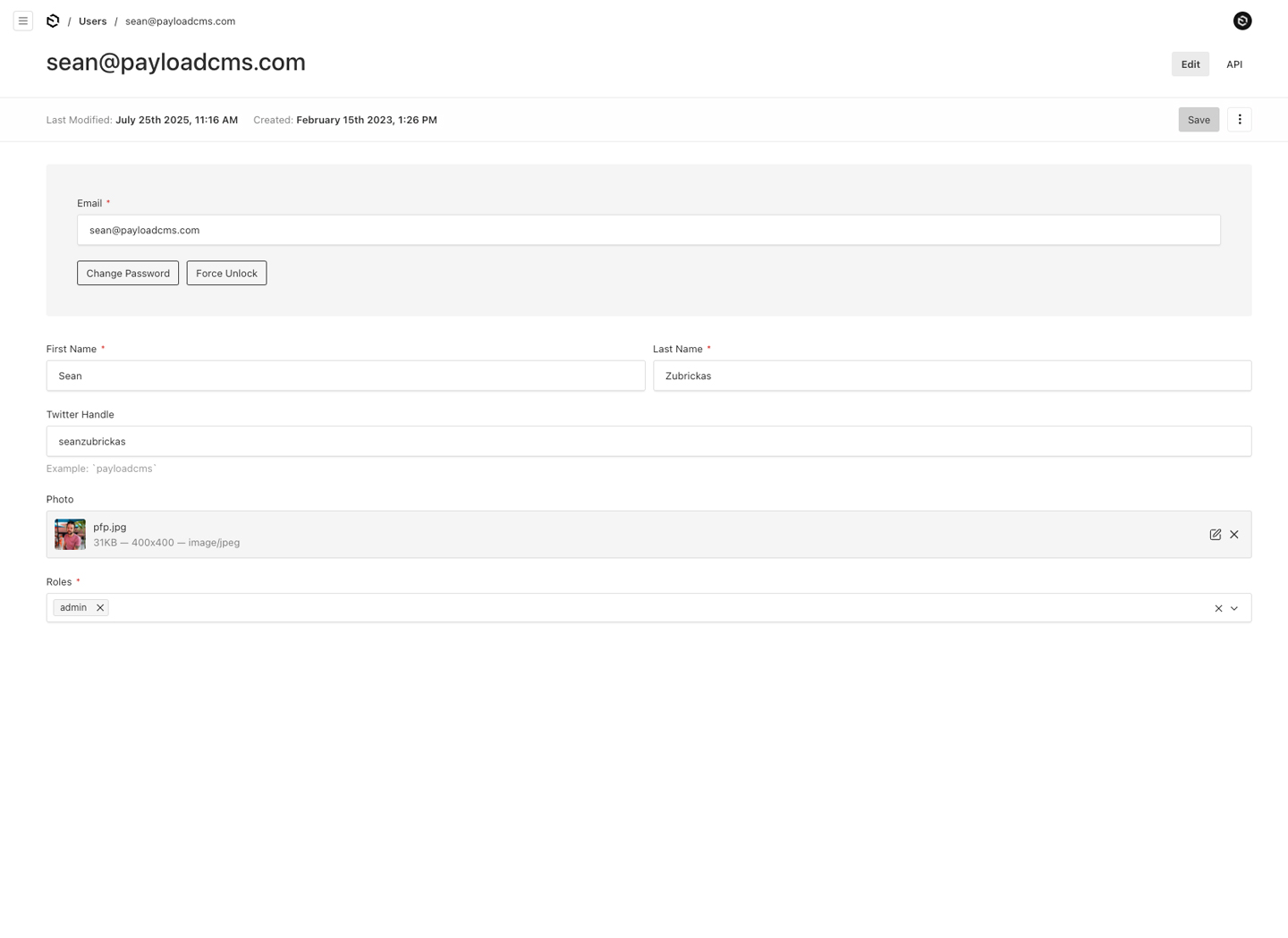

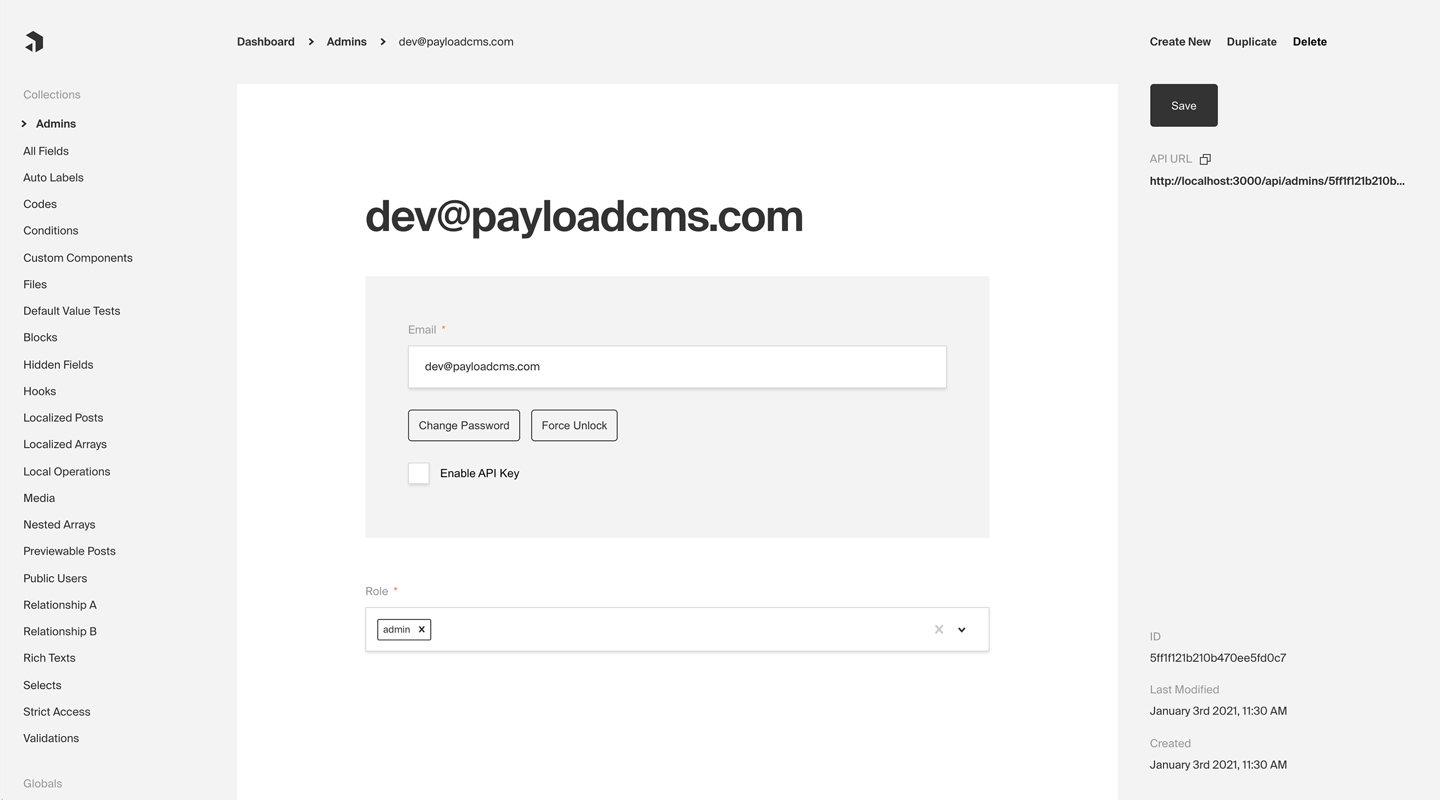

_Admin Panel screenshot depicting an Admins Collection with Auth enabled_

|

||||

|

||||

## Config Options

|

||||

|

||||

62

docs/cloud/configuration.mdx

Normal file

@@ -0,0 +1,62 @@

|

||||

---

|

||||

title: Project Configuration

|

||||

label: Configuration

|

||||

order: 20

|

||||

desc: Quickly configure and deploy your Payload Cloud project in a few simple steps.

|

||||

keywords: configuration, config, settings, project, cloud, payload cloud, deploy, deployment

|

||||

---

|

||||

|

||||

## Select your plan

|

||||

|

||||

Once you have created a project, you will need to select your plan. This will determine the resources that are allocated to your project and the features that are available to you.

|

||||

|

||||

<Banner type="success">

|

||||

Note: All Payload Cloud teams that deploy a project require a card on file.

|

||||

This helps us prevent fraud and abuse on our platform. If you select a plan

|

||||

with a free trial, you will not be charged until your trial period is over.

|

||||

We’ll remind you 7 days before your trial ends and you can cancel anytime.

|

||||

</Banner>

|

||||

|

||||

## Project Details

|

||||

|

||||

| Option | Description |

|

||||

| ---------------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| **Region** | Select the region closest to your audience. This will ensure the fastest communication between your data and your client. |

|

||||

| **Project Name** | A name for your project. You can change this at any time. |

|

||||

| **Project Slug** | Choose a unique slug to identify your project. This needs to be unique for your team and you can change it any time. |

|

||||

| **Team** | Select the team you want to create the project under. If this is your first project, a personal team will be created for you automatically. You can modify your team settings and invite new members at any time from the Team Settings page. |

|

||||

|

||||

## Build Settings

|

||||

|

||||

If you are deploying a new project from a template, the following settings will be automatically configured for you. If you are using your own repository, you need to make sure your build settings are accurate for your project to deploy correctly.

|

||||

|

||||

| Option | Description |

|

||||

| -------------------- | ----------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| **Root Directory** | The folder where your `package.json` file lives. |

|

||||

| **Install Command** | The command used to install your modules, for example: `yarn install` or `npm install` |

|

||||

| **Build Command** | The command used to build your application, for example: `yarn build` or `npm run build` |

|

||||

| **Serve Command** | The command used to serve your application, for example: `yarn serve` or `npm run serve` |

|

||||

| **Branch to Deploy** | Select the branch of your repository that you want to deploy from. This is the branch that will be used to build your project when you commit new changes. |

|

||||

| **Default Domain** | Set a default domain for your project. This must be unique and you will not be able to change it. You can always add a custom domain later in your project settings. |

|

||||

|

||||

## Environment Variables

|

||||

|

||||

Any of the features in Payload Cloud that require environment variables will automatically be provided to your application. If your app requires any custom environment variables, you can set them here.

|

||||

|

||||

<Banner type="warning">

|

||||

Note: For security reasons, any variables you wish to provide to the [Admin

|

||||

Panel](../admin/overview) must be prefixed with `NEXT_PUBLIC_`. Learn more

|

||||

[here](../configuration/environment-vars).

|

||||

</Banner>
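
To illustrate the naming rule in the note above, the following TypeScript sketch picks out only the variables that carry the `NEXT_PUBLIC_` prefix. In a real project Next.js handles this exposure itself at build time; the `pickPublicEnv` helper and the sample variable names are hypothetical and exist only to show which names would qualify.

```typescript
type Env = Record<string, string | undefined>

// Return only the variables whose names start with the public prefix.
function pickPublicEnv(env: Env, prefix = 'NEXT_PUBLIC_'): Record<string, string> {
  const result: Record<string, string> = {}
  for (const [key, value] of Object.entries(env)) {
    if (key.startsWith(prefix) && value !== undefined) {
      result[key] = value
    }
  }
  return result
}

// Made-up variables, for illustration only.
const publicEnv = pickPublicEnv({
  NEXT_PUBLIC_SITE_NAME: 'Example',
  INTERNAL_API_KEY: 'secret',
})
```

Only `NEXT_PUBLIC_SITE_NAME` survives the filter; `INTERNAL_API_KEY` stays server-side.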

|

||||

|

||||

## Payment

|

||||

|

||||

Payment methods can be set per project and can be updated at any time. You can use your team’s default payment method or add a new one. Modify your payment methods in your Project settings or Team settings.

|

||||

|

||||

<Banner type="success">

|

||||

**Note:** All Payload Cloud teams that deploy a project require a card on

|

||||

file. This helps us prevent fraud and abuse on our platform. If you select a

|

||||

plan with a free trial, you will not be charged until your trial period is

|

||||

over. We’ll remind you 7 days before your trial ends and you can cancel

|

||||

anytime.

|

||||

</Banner>

|

||||

53

docs/cloud/creating-a-project.mdx

Normal file

@@ -0,0 +1,53 @@

|

||||

---

|

||||

title: Getting Started

|

||||

label: Getting Started

|

||||

order: 10

|

||||

desc: Get started with Payload Cloud, a deployment solution specifically designed for Node + MongoDB applications.

|

||||

keywords: cloud, hosted, database, storage, email, deployment, serverless, node, mongodb, s3, aws, cloudflare, atlas, resend, payload, cms

|

||||

---

|

||||

|

||||

A deployment solution specifically designed for Node.js + MongoDB applications, offering seamless deployment of your entire stack in one place. You can get started in minutes with a one-click template or bring your own codebase with you.

|

||||

|

||||

Payload Cloud offers various plans tailored to meet your specific needs, including a MongoDB Atlas database, S3 file storage, and email delivery powered by [Resend](https://resend.com). To see a full breakdown of features and plans, see our [Cloud Pricing page](https://payloadcms.com/cloud-pricing).

|

||||

|

||||

To get started, you first need to create an account. Head over to [the login screen](https://payloadcms.com/login) and **Register for Free**.

|

||||

|

||||

<Banner type="success">

|

||||

To create your first project, you can either select [a

|

||||

template](#starting-from-a-template) or [import an existing

|

||||

project](#importing-from-an-existing-codebase) from GitHub.

|

||||

</Banner>

|

||||

|

||||

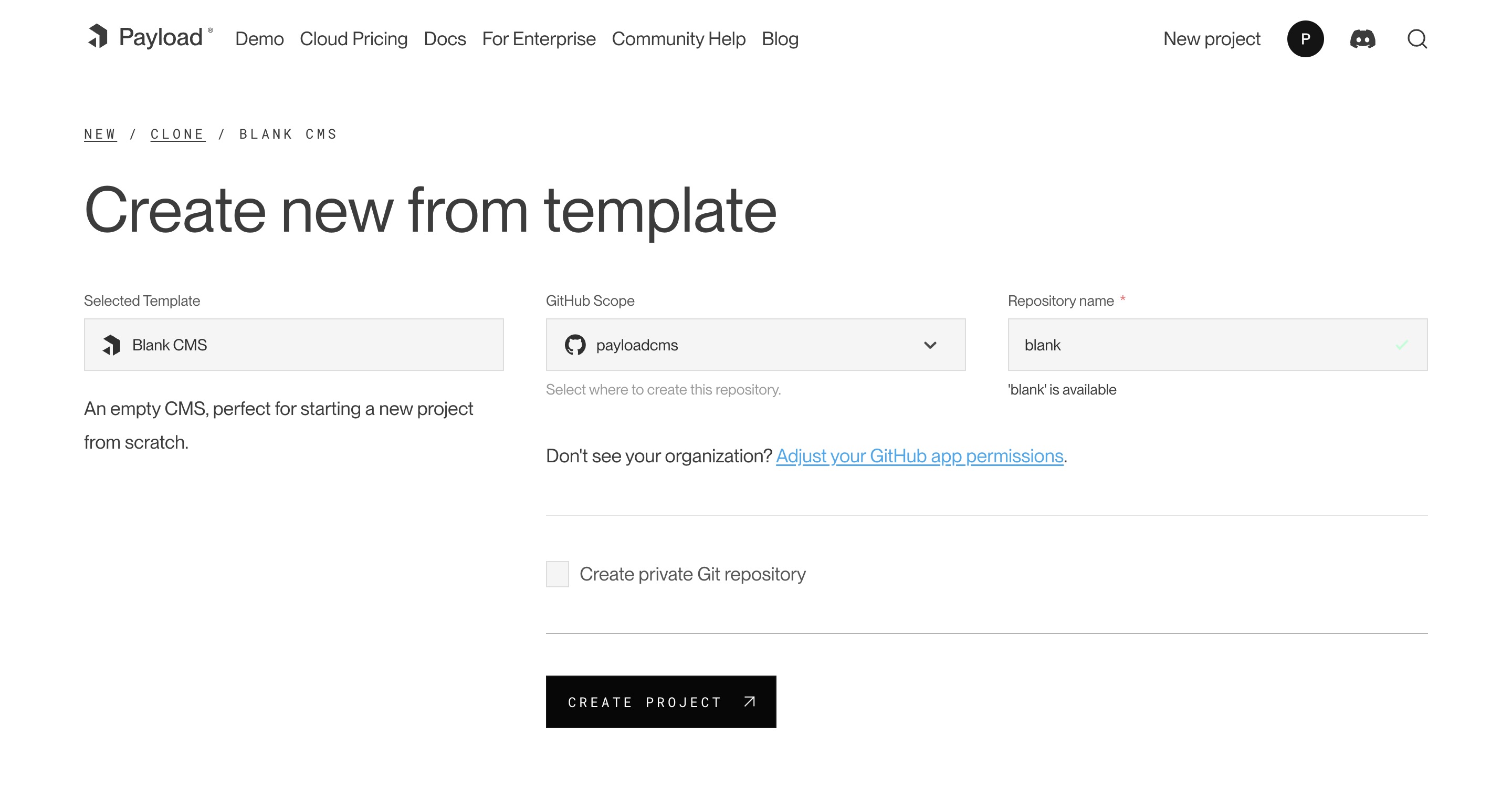

## Starting from a Template

|

||||

|

||||

Templates come preconfigured and provide a one-click solution to quickly deploy a new application.

|

||||

|

||||

|

||||

_Creating a new project from a template._

|

||||

|

||||

After creating an account, select your desired template from the Projects page. At this point, you need to authorize the Payload Cloud application with your GitHub account. Click **Continue with GitHub** and follow the prompts to authorize the app.

|

||||

|

||||

Next, select your `GitHub Scope`. If you belong to multiple organizations, they will show up here. If you do not see the organization you are looking for, you may need to adjust your GitHub app permissions.

|

||||

|

||||

After selecting your scope, create a unique `repository name` and select whether you want your repository to be public or private on GitHub.

|

||||

|

||||

<Banner type="warning">

|

||||

**Note:** Public repositories can be accessed by anyone online, while private

|

||||

repositories grant access only to you and anyone you explicitly authorize.

|

||||

</Banner>

|

||||

|

||||

Once you are ready, click **Create Project**. This will clone the selected template to a new repository in your GitHub account, and take you to the configuration page to set up your project for deployment.

|

||||

|

||||

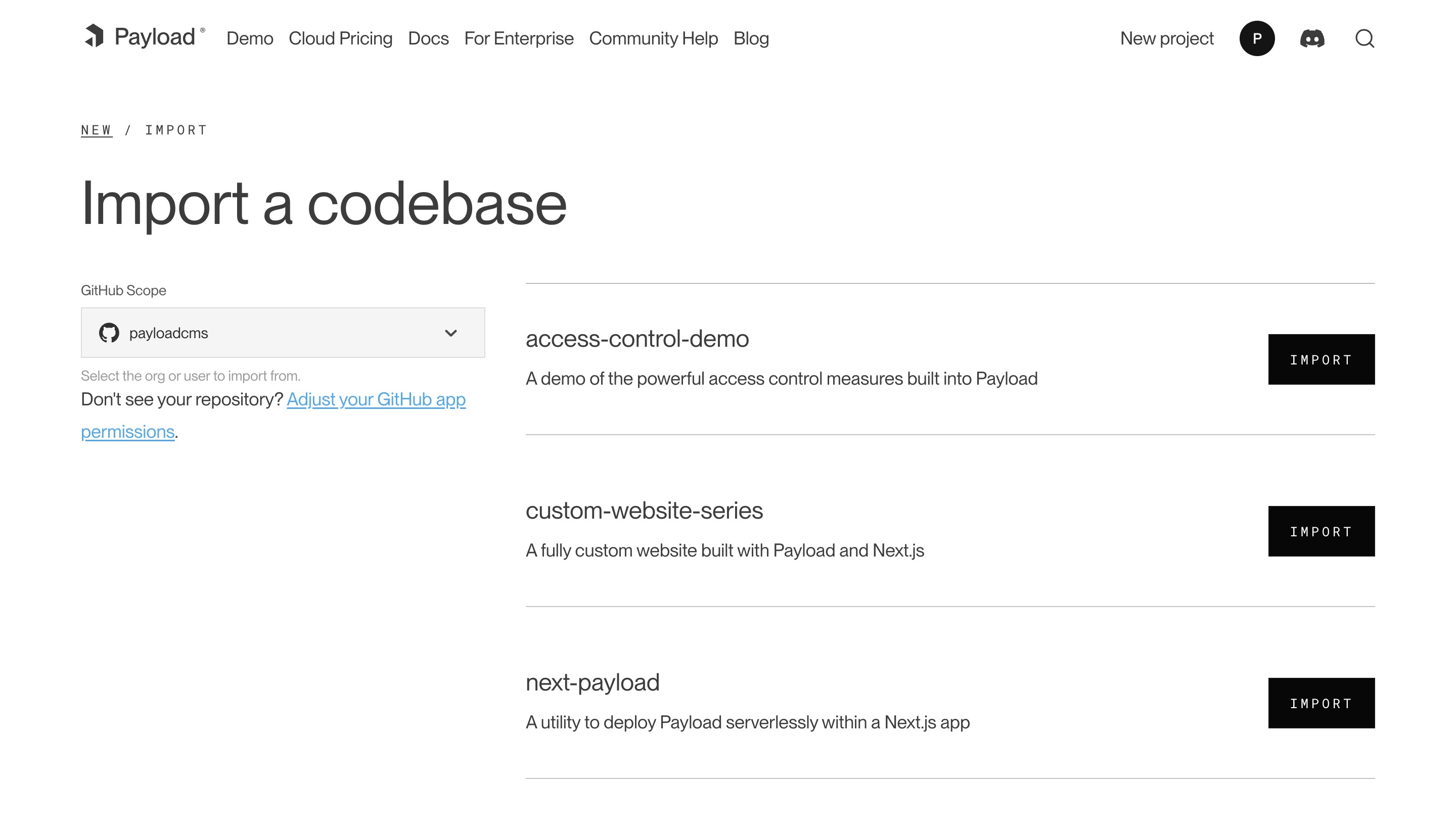

## Importing from an Existing Codebase

|

||||

|

||||

Payload Cloud works for any Node.js + MongoDB app. From the New Project page, select **import an existing Git codebase**. Choose the organization and select the repository you want to import. From here, you will be taken to the configuration page to set up your project for deployment.

|

||||

|

||||

|

||||

_Creating a new project from an existing repository._

|

||||

|

||||

<Banner type="warning">

|

||||

**Note:** In order to make use of the features of Payload Cloud in your own

|

||||

codebase, you will need to add the [Cloud

|

||||

Plugin](https://github.com/payloadcms/payload/tree/main/packages/payload-cloud)

|

||||

to your Payload app.

|

||||

</Banner>

|

||||

137

docs/cloud/projects.mdx

Normal file

@@ -0,0 +1,137 @@

|

||||

---

|

||||

title: Cloud Projects

|

||||

label: Projects

|

||||

order: 40

|

||||

desc: Manage your Payload Cloud projects.

|

||||

keywords: cloud, payload cloud, projects, project, overview, database, file storage, build settings, environment variables, custom domains, email, developing locally

|

||||

---

|

||||

|

||||

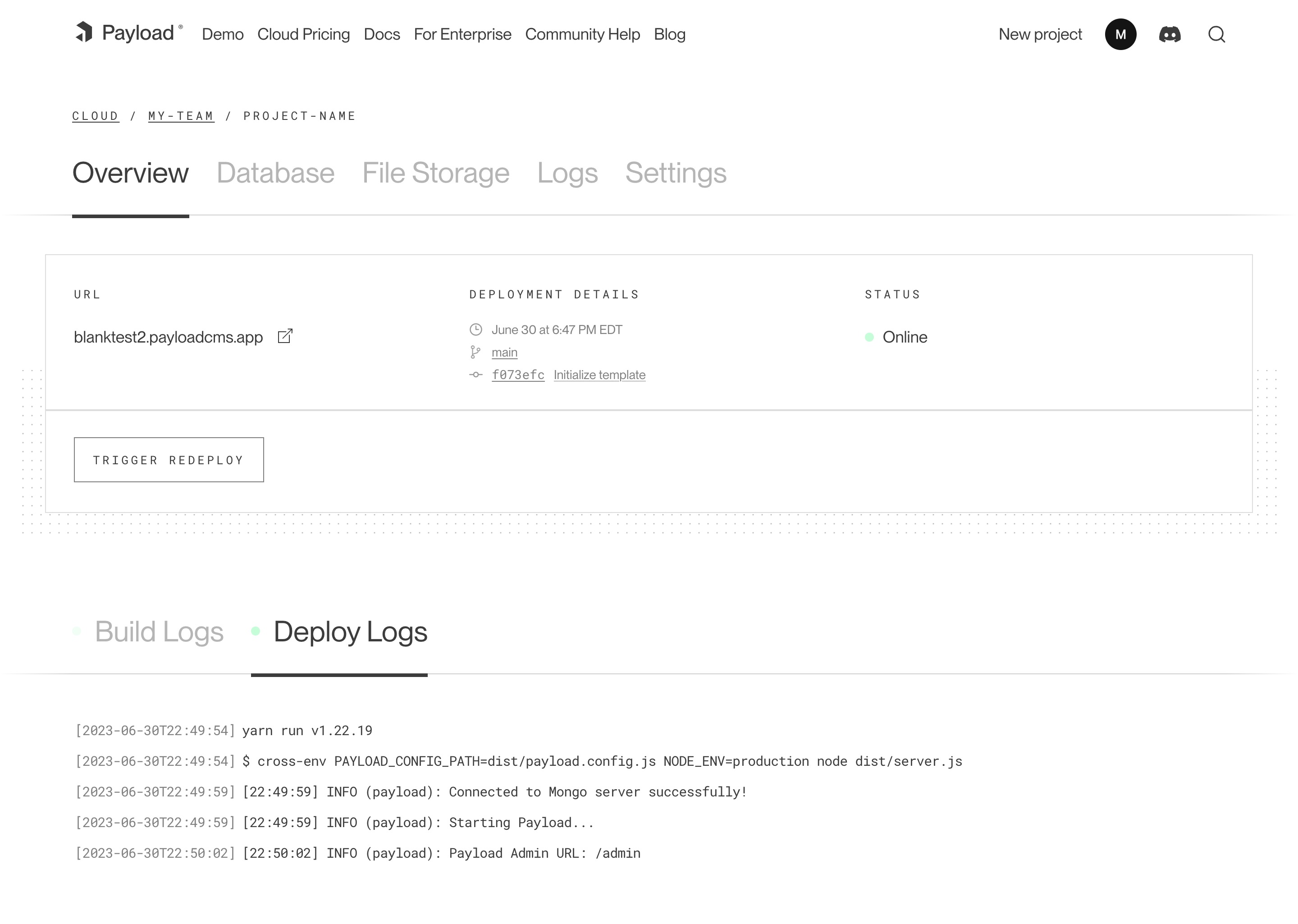

## Overview

|

||||

|

||||

<Banner>

|

||||

The overview tab shows your most recent deployment, along with build and

|

||||

deployment logs. From here, you can see your live URL, deployment details like

|

||||

timestamps and commit hash, as well as the status of your deployment. You can

|

||||

also trigger a redeployment manually, which will rebuild your project using

|

||||

the current configuration.

|

||||

</Banner>

|

||||

|

||||

|

||||

_A screenshot of the Overview page for a Cloud project._

|

||||

|

||||

## Database

|

||||

|

||||

Your Payload Cloud project comes with a MongoDB serverless Atlas DB instance or a Dedicated Atlas cluster, depending on your plan. To interact with your cloud database, you will be provided with a MongoDB connection string. This can be found under the **Database** tab of your project.

|

||||

|

||||

`mongodb+srv://your_connection_string`

|

||||

|

||||

## File Storage

|

||||

|

||||

Payload Cloud gives you S3 file storage backed by Cloudflare as a CDN, and the Payload Cloud Plugin extends Payload so that all of your media will be stored in S3 rather than locally.

|

||||

|

||||

AWS Cognito is used for authentication to your S3 bucket. The [Payload Cloud Plugin](https://github.com/payloadcms/payload/tree/main/packages/payload-cloud) will automatically pick up these values. You only need these values if you'd like to access your files directly, outside of Payload Cloud.

|

||||

|

||||

### Accessing Files Outside of Payload Cloud

|

||||

|

||||

If you'd like to access your files outside of Payload Cloud, you'll need to retrieve some values from your project's settings and put them into your environment variables. In Payload Cloud, navigate to the File Storage tab and copy the values using the copy button. Put these values in your `.env` file. Also copy the Cognito Password value separately and put it into your `.env` file as well.

|

||||

|

||||

When you are done, you should have the following values in your .env file:

|

||||

|

||||

```env

|

||||

PAYLOAD_CLOUD=true

|

||||

PAYLOAD_CLOUD_ENVIRONMENT=prod

|

||||

PAYLOAD_CLOUD_COGNITO_USER_POOL_CLIENT_ID=

|

||||

PAYLOAD_CLOUD_COGNITO_USER_POOL_ID=

|

||||

PAYLOAD_CLOUD_COGNITO_IDENTITY_POOL_ID=

|

||||

PAYLOAD_CLOUD_PROJECT_ID=

|

||||

PAYLOAD_CLOUD_BUCKET=

|

||||

PAYLOAD_CLOUD_BUCKET_REGION=

|

||||

PAYLOAD_CLOUD_COGNITO_PASSWORD=

|

||||

```

|

||||

|

||||

The plugin will pick up these values and use them to access your files.
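
Before relying on direct file access, it can help to confirm that every variable from the list above is actually set. The TypeScript sketch below does that check; the `findMissingCloudVars` helper is hypothetical and is not part of the Payload Cloud Plugin.

```typescript
type Env = Record<string, string | undefined>

// Variable names taken from the .env listing above.
const requiredCloudVars = [
  'PAYLOAD_CLOUD',
  'PAYLOAD_CLOUD_ENVIRONMENT',
  'PAYLOAD_CLOUD_COGNITO_USER_POOL_CLIENT_ID',
  'PAYLOAD_CLOUD_COGNITO_USER_POOL_ID',
  'PAYLOAD_CLOUD_COGNITO_IDENTITY_POOL_ID',
  'PAYLOAD_CLOUD_PROJECT_ID',
  'PAYLOAD_CLOUD_BUCKET',
  'PAYLOAD_CLOUD_BUCKET_REGION',
  'PAYLOAD_CLOUD_COGNITO_PASSWORD',
] as const

// Return the names of required variables that are unset or empty.
function findMissingCloudVars(env: Env): string[] {
  return requiredCloudVars.filter((name) => !env[name])
}

// Made-up partial environment, for illustration only.
const missing = findMissingCloudVars({
  PAYLOAD_CLOUD: 'true',
  PAYLOAD_CLOUD_ENVIRONMENT: 'prod',
})
```

In a Node.js application the same function could be called with `process.env` at startup, failing early when the returned list is not empty.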

|

||||

|

||||

## Build Settings

|

||||

|

||||

You can update settings from your Project’s Settings tab. Changes to your build settings will trigger a redeployment of your project.

|

||||

|

||||

## Environment Variables

|

||||

|

||||

From the Environment Variables page of the Settings tab, you can add, update and delete variables for use in your project. Like build settings, these changes will trigger a redeployment of your project.

|

||||

|

||||

<Banner>

|

||||

Note: For security reasons, any variables you wish to provide to the [Admin

|

||||

Panel](../admin/overview) must be prefixed with `NEXT_PUBLIC_`. [More

|

||||

details](../configuration/environment-vars).

|

||||

</Banner>

|

||||

|

||||

## Custom Domains

|

||||

|

||||

With Payload Cloud, you can add custom domain names to your project. To do so, first go to the Domains page of the Settings tab of your project. Here you can see your default domain. To add a new domain, type in the domain name you wish to use.

|

||||

|

||||

<Banner>

|

||||

Note: do not include the protocol (http:// or https://) or any paths (/page).
Only include the domain name and extension, and optionally a subdomain, for
example `your-domain.com` or `backend.your-domain.com`.

|

||||

</Banner>
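
The rule in the note above (domain and extension only, optionally a subdomain, no protocol and no path) can be checked before a value is submitted. The TypeScript sketch below is one simplified way to do that; the `isBareDomain` helper is hypothetical, and Payload Cloud's own validation may differ.

```typescript
// Return true when the input looks like a bare domain such as "backend.your-domain.com".
function isBareDomain(input: string): boolean {
  const value = input.trim().toLowerCase()

  // Reject anything carrying a protocol or a path.
  if (value.includes('://') || value.includes('/')) {
    return false
  }

  // Each label: letters, digits, and inner hyphens, up to 63 characters.
  const label = '[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?'
  // Require at least two labels separated by dots (name + extension).
  const pattern = new RegExp(`^(?:${label}\\.)+${label}$`)
  return pattern.test(value)
}

const acceptsDomain = isBareDomain('your-domain.com')
const acceptsSubdomain = isBareDomain('backend.your-domain.com')
const rejectsProtocol = isBareDomain('https://your-domain.com')
const rejectsPath = isBareDomain('your-domain.com/page')
```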

|

||||

|

||||

Once you click save, a DNS record will be generated for your domain name to point to your live project. Add this record to your DNS provider’s records. Once the records are resolving properly (this can take anywhere from 1 to 48 hours in some cases), your domain will point to your live project.

|

||||

|

||||

You will also need to configure your Payload project to use your specified domain. In your `payload.config.ts` file, specify your `serverURL` with your domain:

|

||||

|

||||

```ts

|

||||

export default buildConfig({

|

||||

serverURL: 'https://example.com',

|

||||

// the rest of your config,

|

||||

})

|

||||

```

|

||||

|

||||

## Email

|

||||

|

||||

Powered by [Resend](https://resend.com), Payload Cloud comes with integrated email support out of the box. No configuration is needed, and you can use `payload.sendEmail()` to send email right from your Payload app. To learn more about sending email with Payload, check out the [Email Configuration](../email/overview) overview.

|

||||

|

||||

If you are on the Pro or Enterprise plan, you can add your own custom Email domain name. From the Email page of your project’s Settings, add the domain you wish to use for email delivery. This will generate a set of DNS records. Add these records to your DNS provider and click verify to check that your records are resolving properly. Once verified, your emails will now be sent from your custom domain name.

|

||||

|

||||

## Developing Locally

|

||||

|

||||

To make changes to your project, you will need to clone the repository defined in your project settings to your local machine. In order to run your project locally, you will need to configure your local environment first. Refer to your repository’s `README.md` file to see the steps needed for your specific template.

|

||||

|

||||

From there, you are ready to make updates to your project. When you are ready to make your changes live, commit your changes to the branch you specified in your Project settings, and your application will automatically trigger a redeploy and build from your latest commit.

|

||||

|

||||

## Cloud Plugin

|

||||

|

||||

Projects generated from a template will come pre-configured with the official Cloud Plugin, but if you are using your own repository you will need to add this into your project. To do so, add the plugin to your Payload Config:

|

||||

|

||||

`pnpm add @payloadcms/payload-cloud`

|

||||

|

||||

```js

|

||||

import { payloadCloudPlugin } from '@payloadcms/payload-cloud'

|

||||

import { buildConfig } from 'payload'

|

||||

|

||||

export default buildConfig({

|

||||

plugins: [payloadCloudPlugin()],

|

||||

// rest of config

|

||||

})

|

||||

```

|

||||

|

||||

<Banner type="warning">

|

||||

**Note:** If your Payload Config already has an email with transport, this

|

||||

will take precedence over Payload Cloud's email service.

|

||||

</Banner>

|

||||

|

||||

<Banner type="info">

|

||||

Good to know: the Payload Cloud Plugin was previously named

|

||||

`@payloadcms/plugin-cloud`. If you are using this plugin, you should update to

|

||||

the new package name.

|

||||

</Banner>

|

||||

|

||||

#### **Optional configuration**

|

||||

|

||||

If you wish to opt out of any Payload Cloud features, the plugin also accepts options to do so.

|

||||

|

||||

```js

|

||||

payloadCloudPlugin({

|

||||

storage: false, // Disable file storage

|

||||

email: false, // Disable email delivery

|

||||

})

|

||||

```

|

||||

35

docs/cloud/teams.mdx

Normal file

@@ -0,0 +1,35 @@

|

||||

---

|

||||

title: Cloud Teams

|

||||

label: Teams

|

||||

order: 30

|

||||

desc: Manage your Payload Cloud team and billing settings.

|

||||

keywords: team, teams, billing, subscription, payment, plan, plans, cloud, payload cloud

|

||||

---

|

||||

|

||||

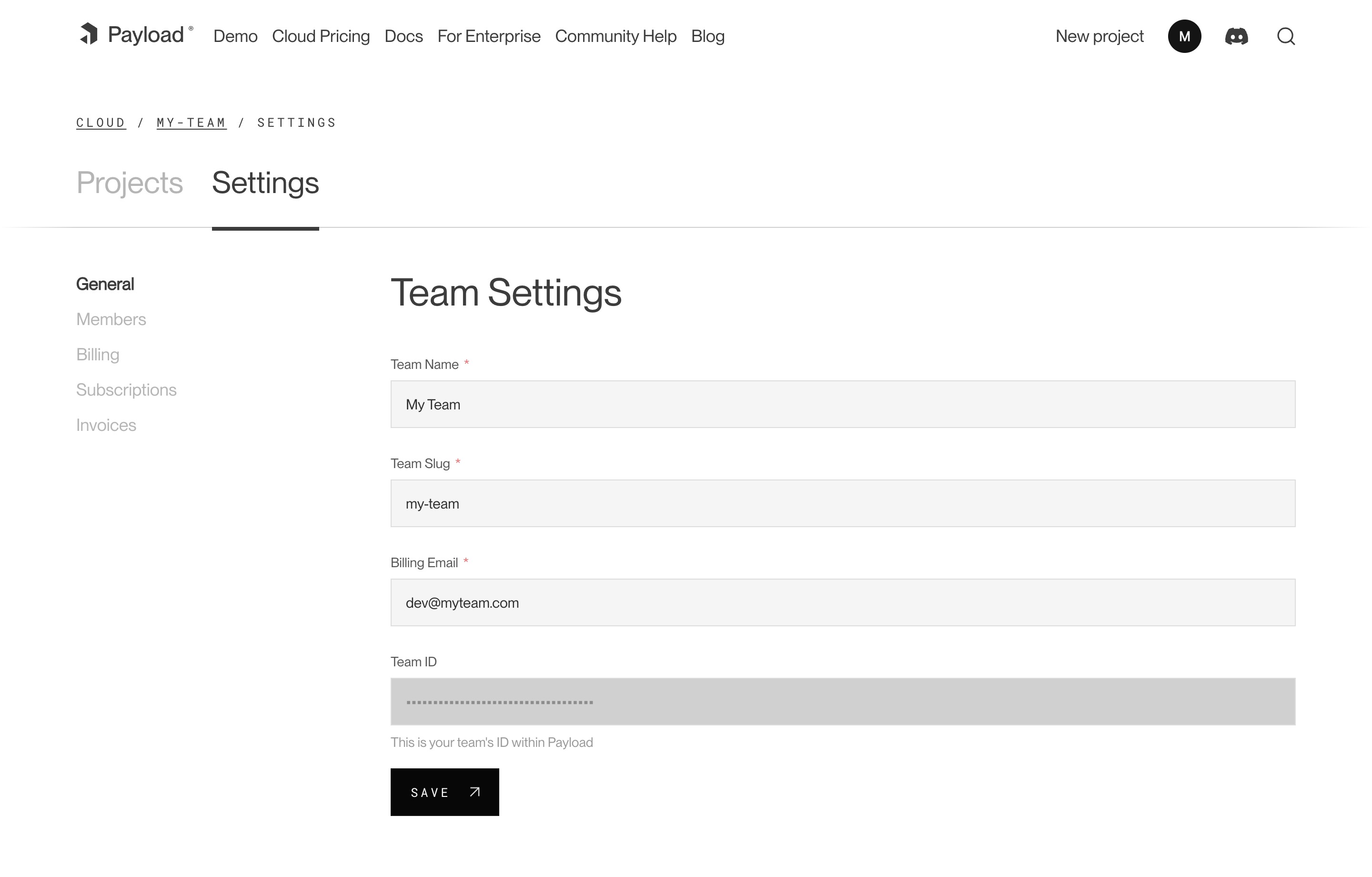

<Banner>

|

||||

Within Payload Cloud, the team management feature offers you the ability to

|

||||

manage your organization, team members, billing, and subscription settings.

|

||||

</Banner>

|

||||

|

||||

|

||||

_A screenshot of the Team Settings page._

|

||||

|

||||

## Members

|

||||

|

||||

Each team has members that can interact with your projects. You can invite multiple people to your team and each individual can belong to more than one team. You can assign them either `owner` or `user` permissions. Owners are able to make admin-only changes, such as deleting projects, and editing billing information.

|

||||

|

||||

## Adding Members

|

||||

|

||||

To add a new member to your team, visit your Team’s Settings page, and click “Invite Teammate”. You can then add their email address, and assign their role. Press “Save” to send the invitations, which will send an email to the invited team member where they can create a new account.

|

||||

|

||||

## Billing

|

||||

|

||||

Users can update billing settings and subscriptions for any teams where they are designated as an `owner`. To make updates to the team’s payment methods, visit the Billing page under the Team Settings tab. You can add new cards, delete cards, and set a payment method as a default. The default payment method will be used in the event that another payment method fails.

|

||||

|

||||

## Subscriptions

|

||||

|

||||

From the Subscriptions page, a team owner can see all current plans for their team. From here, you can see the price of each plan, if there is an active trial, and when you will be billed next.

|

||||

|

||||

## Invoices

|

||||

|

||||

The Invoices page shows the invoices for your account, as well as the status of each payment.

|

||||

@@ -61,7 +61,7 @@ export const Posts: CollectionConfig = {

|

||||

The following options are available:

|

||||

|

||||

| Option | Description |

|

||||

| -------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| -------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| `admin` | The configuration options for the Admin Panel. [More details](#admin-options). |

|

||||

| `access` | Provide Access Control functions to define exactly who should be able to do what with Documents in this Collection. [More details](../access-control/collections). |

|

||||

| `auth` | Specify options if you would like this Collection to feature authentication. [More details](../authentication/overview). |

|

||||

@@ -79,12 +79,11 @@ The following options are available:

|

||||

| `lockDocuments` | Enables or disables document locking. By default, document locking is enabled. Set to an object to configure, or set to `false` to disable locking. [More details](../admin/locked-documents). |

|

||||

| `slug` \* | Unique, URL-friendly string that will act as an identifier for this Collection. |

|

||||

| `timestamps` | Set to false to disable documents' automatically generated `createdAt` and `updatedAt` timestamps. |

|

||||

| `trash` | A boolean to enable soft deletes for this collection. Defaults to `false`. [More details](../trash/overview). |

|

||||

| `typescript` | An object with property `interface` as the text used in schema generation. Auto-generated from slug if not defined. |

|

||||

| `upload` | Specify options if you would like this Collection to support file uploads. For more, consult the [Uploads](../upload/overview) documentation. |

|

||||

| `versions` | Set to true to enable default options, or configure with object properties. [More details](../versions/overview#collection-config). |

|

||||

| `defaultPopulate` | Specify which fields to select when this Collection is populated from another document. [More Details](../queries/select#defaultpopulate-collection-config-property). |

|

||||

| `indexes` | Define compound indexes for this collection. This can be used to either speed up querying/sorting by 2 or more fields at the same time or to ensure uniqueness between several fields. |

|

||||

| `indexes` | Define compound indexes for this collection. This can be used to either speed up querying/sorting by 2 or more fields at the same time or to ensure uniqueness between several fields. [More details](../database/indexes#compound-indexes). |

|

||||

| `forceSelect` | Specify which fields should be selected always, regardless of the `select` query which can be useful that the field exists for access control / hooks |

|

||||

| `disableBulkEdit` | Disable the bulk edit operation for the collection in the admin panel and the REST API |

|

||||

|

||||

@@ -131,7 +130,6 @@ The following options are available:

|

||||

| `description` | Text to display below the Collection label in the List View to give editors more information. Alternatively, you can use the `admin.components.Description` to render a React component. [More details](#custom-components). |

|

||||

| `defaultColumns` | Array of field names that correspond to which columns to show by default in this Collection's List View. |

|

||||

| `disableCopyToLocale` | Disables the "Copy to Locale" button while editing documents within this Collection. Only applicable when localization is enabled. |

|

||||

| `groupBy` | Beta. Enable grouping by a field in the list view. |

|

||||

| `hideAPIURL` | Hides the "API URL" meta field while editing documents within this Collection. |

|

||||

| `enableRichTextLink` | The [Rich Text](../fields/rich-text) field features a `Link` element which allows for users to automatically reference related documents within their rich text. Set to `true` by default. |

|

||||

| `enableRichTextRelationship` | The [Rich Text](../fields/rich-text) field features a `Relationship` element which allows for users to automatically reference related documents within their rich text. Set to `true` by default. |

|

||||

@@ -141,8 +139,8 @@ The following options are available:

|

||||

| `livePreview` | Enable real-time editing for instant visual feedback of your front-end application. [More details](../live-preview/overview). |

|

||||

| `components` | Swap in your own React components to be used within this Collection. [More details](#custom-components). |

|

||||

| `listSearchableFields` | Specify which fields should be searched in the List search view. [More details](#list-searchable-fields). |

|

||||

| `pagination` | Set pagination-specific options for this Collection in the List View. [More details](#pagination). |

|

||||

| `baseFilter` | Defines a default base filter which will be applied to the List View (along with any other filters applied by the user) and internal links in Lexical Editor, |

|

||||

| `pagination` | Set pagination-specific options for this Collection. [More details](#pagination). |

|

||||

| `baseListFilter` | You can define a default base filter for this collection's List view, which will be merged into any filters that the user performs. |

|

||||

|

||||

<Banner type="warning">

|

||||

**Note:** If you set `useAsTitle` to a relationship or join field, it will use

|

||||

|

||||

@@ -158,7 +158,7 @@ export function MyCustomView(props: AdminViewServerProps) {

|

||||

|

||||

<Banner type="success">

|

||||

**Tip:** For consistent layout and navigation, you may want to wrap your

|

||||

Custom View with one of the built-in [Templates](./overview#templates).

|

||||

Custom View with one of the built-in [Template](./overview#templates).

|

||||

</Banner>

|

||||

|

||||

### View Templates

|

||||

|

||||

@@ -293,6 +293,7 @@ Here's an example of a custom `editMenuItems` component:

|

||||

|

||||

```tsx

|

||||

import React from 'react'

|

||||

import { PopupList } from '@payloadcms/ui'

|

||||

|

||||

import type { EditMenuItemsServerProps } from 'payload'

|

||||

|

||||

@@ -300,12 +301,12 @@ export const EditMenuItems = async (props: EditMenuItemsServerProps) => {

|

||||

const href = `/custom-action?id=${props.id}`

|

||||

|

||||

return (

|

||||

<>

|

||||

<a href={href}>Custom Edit Menu Item</a>

|

||||

<a href={href}>

|

||||

<PopupList.ButtonGroup>

|

||||

<PopupList.Button href={href}>Custom Edit Menu Item</PopupList.Button>

|

||||

<PopupList.Button href={href}>

|

||||

Another Custom Edit Menu Item - add as many as you need!

|

||||

</a>

|

||||

</>

|

||||

</PopupList.Button>

|

||||

</PopupList.ButtonGroup>

|

||||

)

|

||||

}

|

||||

```

|

||||

|

||||

@@ -63,22 +63,3 @@ export const MyCollection: CollectionConfig = {

|

||||

],

|

||||

}

|

||||

```

|

||||

## Localized fields and MongoDB indexes

|

||||

|

||||

When you set `index: true` or `unique: true` on a localized field, MongoDB creates one index **per locale path** (e.g., `slug.en`, `slug.da-dk`, etc.). With many locales and indexed fields, this can quickly approach MongoDB's per-collection index limit.

|

||||

|

||||

If you know you'll query specifically by a locale, index only those locale paths using the collection-level `indexes` option instead of setting `index: true` on the localized field. This approach gives you more control and helps avoid unnecessary indexes.

|

||||

|

||||

```ts

|

||||

import type { CollectionConfig } from 'payload'

|

||||

|

||||

export const Pages: CollectionConfig = {

|

||||

fields: [{ name: 'slug', type: 'text', localized: true }],

|

||||

indexes: [

|

||||

// Index English slug only (rather than all locales)

|

||||

{ fields: ['slug.en'] },

|

||||

// You could also make it unique:

|

||||

// { fields: ['slug.en'], unique: true },

|

||||

],

|

||||

}

|

||||

```

|

||||

|

||||

@@ -31,7 +31,7 @@ export default buildConfig({

|

||||

## Options

|

||||

|

||||

| Option | Description |

|

||||

| ---------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

|

||||

| -------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

|

||||

| `autoPluralization` | Tell Mongoose to auto-pluralize any collection names if it encounters any singular words used as collection `slug`s. |

|

||||

| `connectOptions` | Customize MongoDB connection options. Payload will connect to your MongoDB database using default options which you can override and extend to include all the [options](https://mongoosejs.com/docs/connections.html#options) available to mongoose. |

|

||||

| `collectionsSchemaOptions` | Customize Mongoose schema options for collections. |

|

||||

@@ -42,10 +42,6 @@ export default buildConfig({

|

||||

| `allowAdditionalKeys` | By default, Payload strips all additional keys from MongoDB data that don't exist in the Payload schema. If you have some data that you want to include to the result but it doesn't exist in Payload, you can set this to `true`. Be careful as Payload access control _won't_ work for this data. |

|

||||

| `allowIDOnCreate` | Set to `true` to use the `id` passed in data on the create API operations without using a custom ID field. |

|

||||

| `disableFallbackSort` | Set to `true` to disable the adapter adding a fallback sort when sorting by non-unique fields, this can affect performance in some cases but it ensures a consistent order of results. |

|

||||

| `useAlternativeDropDatabase` | Set to `true` to use an alternative `dropDatabase` implementation that calls `collection.deleteMany({})` on every collection instead of sending a raw `dropDatabase` command. Payload only uses `dropDatabase` for testing purposes. Defaults to `false`. |

|

||||

| `useBigIntForNumberIDs` | Set to `true` to use `BigInt` for custom ID fields of type `'number'`. Useful for databases that don't support `double` or `int32` IDs. Defaults to `false`. |

|

||||

| `useJoinAggregations` | Set to `false` to disable join aggregations (which use correlated subqueries) and instead populate join fields via multiple `find` queries. Defaults to `true`. |

|

||||

| `usePipelineInSortLookup` | Set to `false` to disable the use of `pipeline` in the `$lookup` aggregation in sorting. Defaults to `true`. |

|

||||

|

||||

## Access to Mongoose models

|

||||

|

||||

@@ -60,21 +56,9 @@ You can access Mongoose models as follows:

|

||||

|

||||

## Using other MongoDB implementations

|

||||

|

||||

You can import the `compatibilityOptions` object to get the recommended settings for other MongoDB implementations. Since these databases aren't officially supported by payload, you may still encounter issues even with these settings (please create an issue or PR if you believe these options should be updated):

|

||||

Limitations with [DocumentDB](https://aws.amazon.com/documentdb/) and [Azure Cosmos DB](https://azure.microsoft.com/en-us/products/cosmos-db):

|

||||

|

||||

```ts

|

||||

import { mongooseAdapter, compatibilityOptions } from '@payloadcms/db-mongodb'

|

||||

|

||||

export default buildConfig({

|

||||

db: mongooseAdapter({

|

||||

url: process.env.DATABASE_URI,

|

||||

// For example, if you're using firestore:

|

||||

...compatibilityOptions.firestore,

|

||||

}),

|

||||

})

|

||||

```

|

||||

|

||||

We export compatibility options for [DocumentDB](https://aws.amazon.com/documentdb/), [Azure Cosmos DB](https://azure.microsoft.com/en-us/products/cosmos-db) and [Firestore](https://cloud.google.com/firestore/mongodb-compatibility/docs/overview). Known limitations:

|

||||

|

||||

- Azure Cosmos DB does not support transactions that update two or more documents in different collections, which is a common case when using Payload (via hooks).

|

||||

- Azure Cosmos DB the root config property `indexSortableFields` must be set to `true`.

|

||||

- For Azure Cosmos DB you must pass `transactionOptions: false` to the adapter options. Azure Cosmos DB does not support transactions that update two and more documents in different collections, which is a common case when using Payload (via hooks).

|

||||

- For Azure Cosmos DB the root config property `indexSortableFields` must be set to `true`.

|

||||

- The [Join Field](../fields/join) is not supported in DocumentDB and Azure Cosmos DB, as we internally use MongoDB aggregations to query data for that field, which are limited there. This can be changed in the future.

|

||||

- For DocumentDB pass `disableIndexHints: true` to disable hinting to the DB to use `id` as index which can cause problems with DocumentDB.

|

||||

|

||||

@@ -296,16 +296,11 @@ query {

|

||||

sort: "createdAt"

|

||||

limit: 5

|

||||

where: { author: { equals: "66e3431a3f23e684075aaeb9" } }

|

||||

"""

|

||||

Optionally pass count: true if you want to retrieve totalDocs

|

||||

"""

|

||||

count: true -- s

|

||||

) {

|

||||

docs {

|

||||

title

|

||||

}

|

||||

hasNextPage

|

||||

totalDocs

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@@ -157,7 +157,6 @@ The following field names are forbidden and cannot be used:

|

||||

- `salt`

|

||||

- `hash`

|

||||

- `file`

|

||||

- `status` - with Postgres Adapter and when drafts are enabled

|

||||

|

||||

### Field-level Hooks

|

||||

|

||||

|

||||

@@ -81,7 +81,7 @@ To install a Database Adapter, you can run **one** of the following commands:

|

||||

|

||||

#### 2. Copy Payload files into your Next.js app folder

|

||||

|

||||

Payload installs directly in your Next.js `/app` folder, and you'll need to place some files into that folder for Payload to run. You can copy these files from the [Blank Template](https://github.com/payloadcms/payload/tree/main/templates/blank/src/app/%28payload%29) on GitHub. Once you have the required Payload files in place in your `/app` folder, you should have something like this:

|

||||

Payload installs directly in your Next.js `/app` folder, and you'll need to place some files into that folder for Payload to run. You can copy these files from the [Blank Template](<https://github.com/payloadcms/payload/tree/main/templates/blank/src/app/(payload)>) on GitHub. Once you have the required Payload files in place in your `/app` folder, you should have something like this:

|

||||

|

||||

```plaintext

|

||||

app/

|

||||

|

||||

@@ -34,20 +34,20 @@ npm i @payloadcms/plugin-csm

|

||||

Then in the `plugins` array of your Payload Config, call the plugin and enable any collections that require Content Source Maps.

|

||||

|

||||

```ts

|

||||

import { buildConfig } from 'payload/config'

|

||||

import contentSourceMaps from '@payloadcms/plugin-csm'

|

||||

import { buildConfig } from "payload/config"

|

||||

import contentSourceMaps from "@payloadcms/plugin-csm"

|

||||

|

||||

const config = buildConfig({

|

||||

collections: [

|

||||

{

|

||||

slug: 'pages',

|

||||

slug: "pages",

|

||||

fields: [

|

||||

{

|

||||

name: 'slug',

|

||||

type: 'text',

|

||||

},

|

||||

{

|

||||

name: 'title',

|

||||

name: 'title,'

|

||||

type: 'text',

|

||||

},

|

||||

],

|

||||

@@ -55,7 +55,7 @@ const config = buildConfig({

|

||||

],

|

||||

plugins: [

|

||||

contentSourceMaps({

|

||||

collections: ['pages'],

|

||||

collections: ["pages"],

|

||||

}),

|

||||

],

|

||||

})

|

||||

|

||||

@@ -51,7 +51,7 @@ export default buildConfig({

|

||||

// add as many cron jobs as you want

|

||||

],

|

||||

shouldAutoRun: async (payload) => {

|

||||

// Tell Payload if it should run jobs or not. This function is optional and will return true by default.

|

||||

// Tell Payload if it should run jobs or not.

|

||||

// This function will be invoked each time Payload goes to pick up and run jobs.

|

||||

// If this function ever returns false, the cron schedule will be stopped.

|

||||

return true

|

||||

|

||||

@@ -1,155 +0,0 @@

|

||||

---

|

||||

title: Job Schedules

|

||||

label: Schedules

|

||||

order: 60

|

||||

desc: Payload allows you to schedule jobs to run periodically

|

||||

keywords: jobs queue, application framework, typescript, node, react, nextjs, scheduling, cron, schedule

|

||||

---

|

||||

|

||||

Payload's `schedule` property lets you enqueue Jobs regularly according to a cron schedule - daily, weekly, hourly, or any custom interval. This is ideal for tasks or workflows that must repeat automatically and without manual intervention.

|

||||

|

||||

Scheduling Jobs differs significantly from running them:

|

||||

|

||||

- **Queueing**: Scheduling only creates (enqueues) the Job according to your cron expression. It does not immediately execute any business logic.

|

||||

- **Running**: Execution happens separately through your Jobs runner - such as autorun, or manual invocation using `payload.jobs.run()` or the `payload-jobs/run` endpoint.

|

||||

|

||||

Use the `schedule` property specifically when you have recurring tasks or workflows. To enqueue a single Job to run once in the future, use the `waitUntil` property instead.

|

||||

|

||||

## Example use cases

|

||||

|

||||

**Regular emails or notifications**

|

||||

|

||||

Send nightly digests, weekly newsletters, or hourly updates.

|

||||

|

||||

**Batch processing during off-hours**

|

||||

|

||||

Process analytics data or rebuild static sites during low-traffic times.

|

||||

|

||||

**Periodic data synchronization**

|

||||

|

||||

Regularly push or pull updates to or from external APIs.

|

||||

|

||||

## Handling schedules

|

||||

|

||||

Something needs to actually trigger the scheduling of jobs (execute the scheduling lifecycle seen below). By default, the `jobs.autorun` configuration, as well as the `/api/payload-jobs/run` will also handle scheduling for the queue specified in the `autorun` configuration.

|

||||

|

||||

You can disable this behavior by setting `disableScheduling: true` in your `autorun` configuration, or by passing `disableScheduling=true` to the `/api/payload-jobs/run` endpoint. This is useful if you want to handle scheduling manually, for example, by using a cron job or a serverless function that calls the `/api/payload-jobs/handle-schedules` endpoint or the `payload.jobs.handleSchedules()` local API method.
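
As a rough illustration of the opt-out described above, the fragment below shows where `disableScheduling` might sit. It is a sketch, not the definitive config shape: `jobsConfig` is a hypothetical plain object standing in for the `jobs` key of a Payload config, and placing the flag on each `autoRun` entry is an assumption based on the wording of the paragraph above.

```ts
// Hypothetical stand-in for the `jobs` key passed to buildConfig().
// Assumption: `disableScheduling` is set per autoRun entry.
const jobsConfig = {
  autoRun: [
    {
      cron: '* * * * *', // check the queue every minute
      queue: 'nightly',
      // Only run Jobs that are already queued; leave enqueueing of
      // scheduled Jobs to an external trigger such as handle-schedules.
      disableScheduling: true,
    },
  ],
}
```

With a setup like this, something else (a cron job or serverless function) has to call `/api/payload-jobs/handle-schedules` or `payload.jobs.handleSchedules()`, otherwise nothing is enqueued.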

|

||||

|

||||

## Defining schedules on Tasks or Workflows

|

||||

|

||||

Schedules are defined using the `schedule` property:

|

||||

|

||||

```ts

|

||||

export type ScheduleConfig = {

|

||||

cron: string // required, supports seconds precision

|

||||

queue: string // required, the queue to push Jobs onto

|

||||

hooks?: {

|

||||

// Optional hooks to customize scheduling behavior

|

||||

beforeSchedule?: BeforeScheduleFn

|

||||

afterSchedule?: AfterScheduleFn

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

### Example schedule

|

||||

|

||||

The following example demonstrates scheduling a Job to enqueue every day at midnight:

|

||||

|

||||

```ts

|

||||

import type { TaskConfig } from 'payload'

|

||||

|

||||

export const SendDigestEmail: TaskConfig<'SendDigestEmail'> = {

|

||||

slug: 'SendDigestEmail',

|

||||

schedule: [

|

||||

{

|

||||

cron: '0 0 * * *', // Every day at midnight

|

||||

queue: 'nightly',

|

||||

},

|

||||

],

|

||||

handler: async () => {

|

||||

await sendDigestToAllUsers()

|

||||

},

|

||||

}

|

||||

```

|

||||

|

||||

This configuration only queues the Job - it does not execute it immediately. To actually run the queued Job, you configure autorun in your Payload config (note that autorun should **not** be used on serverless platforms):

|

||||

|

||||

```ts

|

||||

export default buildConfig({

|

||||

jobs: {

|

||||

autoRun: [

|

||||

{

|

||||

cron: '* * * * *', // Runs every minute

|

||||

queue: 'nightly',

|

||||

},

|

||||

],

|

||||

tasks: [SendDigestEmail],

|

||||

},

|

||||

})

|

||||

```

|

||||

|

||||

That way, Payload's scheduler will automatically enqueue the job into the `nightly` queue every day at midnight. The autorun configuration will check the `nightly` queue every minute and execute any Jobs that are due to run.

|

||||

|

||||

## Scheduling lifecycle

|

||||

|

||||

Here's how the scheduling process operates in detail:

|

||||

|

||||

1. **Cron evaluation**: Payload (or your external trigger in `manual` mode) identifies which schedules are due to run. To do that, it will

|

||||

read the `payload-jobs-stats` global which contains information about the last time each scheduled task or workflow was run.

|

||||

2. **BeforeSchedule hook**:

|

||||

- The default beforeSchedule hook checks how many active or runnable jobs of the same type that have been queued by the scheduling system currently exist.

|

||||

If such a job exists, it will skip scheduling a new one.

|

||||

- You can provide your own `beforeSchedule` hook to customize this behavior. For example, you might want to allow multiple overlapping Jobs or dynamically set the Job input data.

|

||||

3. **Enqueue Job**: Payload queues up a new job. This job will have `waitUntil` set to the next scheduled time based on the cron expression.

|

||||

4. **AfterSchedule hook**:

|

||||

- The default afterSchedule hook updates the `payload-jobs-stats` global metadata with the last scheduled time for the Job.

|

||||

- You can provide your own afterSchedule hook to it for custom logging, metrics, or other post-scheduling actions.
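
The four steps above can be modelled in a few lines of plain TypeScript. This is a simplified, in-memory sketch to make the control flow concrete, not Payload's implementation: `Schedule`, `QueuedJob`, `handleSchedule`, and the `isDue` callback are all hypothetical names, cron parsing is replaced by the `isDue` stand-in, and the `waitUntil` value mentioned in step 3 is left out.

```ts
// Hypothetical, simplified model of the scheduling lifecycle.
// None of these names are Payload APIs.
type BeforeScheduleResult = { shouldSchedule: boolean; input?: unknown }

type QueuedJob = { queue: string; input?: unknown }

type Schedule = {
  queue: string
  // Stand-in for cron evaluation: true when the schedule is due.
  isDue: (now: Date, lastScheduled?: Date) => boolean
  // Optional override of the default "skip if one already exists" rule.
  beforeSchedule?: (activeCount: number) => BeforeScheduleResult
}

function handleSchedule(
  schedule: Schedule,
  state: { lastScheduled?: Date; jobs: QueuedJob[] },
  now: Date,
): boolean {
  // 1. Cron evaluation: is this schedule due, given the last scheduled time?
  if (!schedule.isDue(now, state.lastScheduled)) return false

  // 2. beforeSchedule: by default, skip when a job for this queue already exists.
  const activeCount = state.jobs.filter((job) => job.queue === schedule.queue).length
  const decision: BeforeScheduleResult = schedule.beforeSchedule
    ? schedule.beforeSchedule(activeCount)
    : { shouldSchedule: activeCount === 0 }
  if (!decision.shouldSchedule) return false

  // 3. Enqueue the job (only queued here, never executed).
  state.jobs.push({ queue: schedule.queue, input: decision.input })

  // 4. afterSchedule: record when this schedule last enqueued a job.
  state.lastScheduled = now
  return true
}
```

Calling `handleSchedule` twice in a row with the default rule enqueues only one job, because the second call sees the job that is still sitting in the queue.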

|

||||

|

||||

## Customizing concurrency and input (Advanced)

|

||||

|

||||

You may want more control over concurrency or dynamically set Job inputs at scheduling time. For instance, allowing multiple overlapping Jobs to be scheduled, even if a previously scheduled job has not completed yet, or preparing dynamic data to pass to your Job handler:

|

||||

|

||||

```ts

|

||||

import { countRunnableOrActiveJobsForQueue } from 'payload'

|

||||

|

||||

schedule: [

|

||||

{

|

||||

cron: '* * * * *', // every minute

|

||||

queue: 'reports',

|

||||

hooks: {

|

||||

beforeSchedule: async ({ queueable, req }) => {

|

||||

const runnableOrActiveJobsForQueue =

|

||||

await countRunnableOrActiveJobsForQueue({

|

||||

queue: queueable.scheduleConfig.queue,

|

||||

req,

|

||||

taskSlug: queueable.taskConfig?.slug,

|

||||

workflowSlug: queueable.workflowConfig?.slug,

|

||||

onlyScheduled: true,

|

||||

})

|

||||

|

||||

// Allow up to 3 simultaneous scheduled jobs and set dynamic input

|

||||

return {

|

||||

shouldSchedule: runnableOrActiveJobsForQueue < 3,

|

||||

input: { text: 'Hi there' },

|

||||

}

|

||||

},

|

||||

},

|

||||

},

|

||||

]

|

||||

```

|

||||

|

||||

This allows fine-grained control over how many Jobs can run simultaneously and provides dynamically computed input values each time a Job is scheduled.

|

||||

|

||||

## Scheduling in serverless environments

|

||||

|

||||

On serverless platforms, scheduling must be triggered externally since Payload does not automatically run cron schedules in ephemeral environments. You have two main ways to trigger scheduling manually:

|

||||

|

||||

- **Invoke via Payload's API:** `payload.jobs.handleSchedules()`

|

||||

- **Use the REST API endpoint:** `/api/payload-jobs/handle-schedules`

|

||||

- **Use the run endpoint, which also handles scheduling by default:** `GET /api/payload-jobs/run`

|

||||

|

||||

For example, on Vercel, you can set up a Vercel Cron to regularly trigger scheduling:

|

||||

|

||||

- **Vercel Cron Job:** Configure Vercel Cron to periodically call `GET /api/payload-jobs/handle-schedules`. If you would like to auto-run your scheduled jobs as well, you can use the `GET /api/payload-jobs/run` endpoint.

|

||||

|

||||

Once Jobs are queued, their execution depends entirely on your configured runner setup (e.g., autorun, or manual invocation).
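
For an external trigger, the request itself is small. The helper below is a hedged sketch of how a cron-invoked function might build it: `buildHandleSchedulesRequest`, `baseUrl`, and `secret` are hypothetical names, and the bearer-token header is an assumption about how the endpoint is protected in your project, not something this page specifies.

```ts
// Hypothetical helper; names and the auth scheme are illustrative assumptions.
type ScheduleRequest = {
  url: string
  init: { method: 'GET'; headers: Record<string, string> }
}

function buildHandleSchedulesRequest(baseUrl: string, secret?: string): ScheduleRequest {
  // Strip trailing slashes so the path is not doubled up.
  const origin = baseUrl.replace(/\/+$/, '')
  const headers: Record<string, string> = {}
  // Assumption: access to the endpoint is guarded by a bearer token.
  if (secret) headers['Authorization'] = `Bearer ${secret}`
  return {
    url: `${origin}/api/payload-jobs/handle-schedules`,
    init: { method: 'GET', headers },
  }
}
```

The returned `url` and `init` can be passed straight to `fetch(url, init)` from whatever environment runs your external cron.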

|

||||

@@ -45,11 +45,13 @@ The following options are available:

|

||||

|

||||

| Path | Description |

|

||||

| ----------------- | ----------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| **`url`** | String, or function that returns a string, pointing to your front-end application. This value is used as the iframe `src`. [More details](#url). |

|

||||

| **`url`** \* | String, or function that returns a string, pointing to your front-end application. This value is used as the iframe `src`. [More details](#url). |

|

||||

| **`breakpoints`** | Array of breakpoints to be used as “device sizes” in the preview window. Each item appears as an option in the toolbar. [More details](#breakpoints). |

|

||||

| **`collections`** | Array of collection slugs to enable Live Preview on. |

|

||||

| **`globals`** | Array of global slugs to enable Live Preview on. |

|

||||

|

||||

_\* An asterisk denotes that a property is required._

|

||||

|

||||

### URL

|

||||

|

||||

The `url` property resolves to a string that points to your front-end application. This value is used as the `src` attribute of the iframe rendering your front-end. Once loaded, the Admin Panel will communicate directly with your app through `window.postMessage` events.

|

||||

@@ -86,16 +88,17 @@ const config = buildConfig({

|

||||

// ...

|

||||

livePreview: {

|

||||

// highlight-start

|

||||

url: ({ data, collectionConfig, locale }) =>

|

||||

`${data.tenant.url}${

|

||||

collectionConfig.slug === 'posts'

|

||||

? `/posts/${data.slug}`

|

||||

: `${data.slug !== 'home' ? `/${data.slug}` : ''}`

|

||||

url: ({

|

||||

data,

|

||||

collectionConfig,

|

||||

locale

|

||||

}) => `${data.tenant.url}${ // Multi-tenant top-level domain

|

||||

collectionConfig.slug === 'posts' ? `/posts/${data.slug}` : `${data.slug !== 'home' : `/${data.slug}` : ''}`

|

||||

}${locale ? `?locale=${locale?.code}` : ''}`, // Localization query param

|

||||

collections: ['pages'],

|

||||

},

|

||||

// highlight-end

|

||||

},

|

||||

}

|

||||

})

|

||||

```

|

||||

|

||||

|

||||

@@ -51,7 +51,6 @@ export default async function Page() {

|

||||

collection: 'pages',

|

||||

id: '123',

|

||||

draft: true,

|

||||

trash: true, // add this if trash is enabled in your collection and want to preview trashed documents

|

||||

})

|

||||

|

||||

return (

|

||||

|

||||

@@ -162,11 +162,6 @@ const result = await payload.find({

|

||||

})

|

||||

```

|

||||

|

||||

<Banner type="info">

|

||||

`pagination`, `page`, and `limit` are three related properties [documented

|

||||

here](/docs/queries/pagination).

|

||||

</Banner>

|

||||

|

||||

### Find by ID#collection-find-by-id

|

||||

|

||||

```js

|

||||

@@ -199,27 +194,6 @@ const result = await payload.count({

|

||||

})

|

||||

```

|

||||

|

||||

### FindDistinct#collection-find-distinct

|

||||

|

||||

```js

|

||||

// Result will be an object with:

|

||||

// {

|

||||

// values: ['value-1', 'value-2'], // array of distinct values,

|

||||

// field: 'title', // the field

|

||||

// totalDocs: 10, // count of the distinct values satisfies query,

|

||||

// perPage: 10, // count of distinct values per page (based on provided limit)

|

||||

// }

|

||||

const result = await payload.findDistinct({

|

||||

collection: 'posts', // required

|

||||

locale: 'en',

|

||||

where: {}, // pass a `where` query here

|

||||

user: dummyUser,

|

||||

overrideAccess: false,

|

||||

field: 'title',

|

||||

sort: 'title',

|

||||

})

|

||||

```

|

||||

|

||||

### Update by ID#collection-update-by-id

|

||||

|

||||

```js

|

||||

|

||||

@@ -58,7 +58,7 @@ To learn more, see the [Custom Components Performance](../admin/custom-component

|

||||

|

||||

### Block references

|

||||

|

||||

Use [Block References](../fields/blocks#block-references) to share the same block across multiple fields without bloating the config. This will reduce the number of fields to traverse when processing permissions, etc. and can significantly reduce the amount of data sent from the server to the client in the Admin Panel.

|

||||

Use [Block References](../fields/blocks#block-references) to share the same block across multiple fields without bloating the config. This will reduce the number of fields to traverse when processing permissions, etc. and can can significantly reduce the amount of data sent from the server to the client in the Admin Panel.

|

||||

|

||||

For example, if you have a block that is used in multiple fields, you can define it once and reference it in each field.

|

||||

|

||||

@@ -207,7 +207,7 @@ Everything mentioned above applies to local development as well, but there are a

|

||||

### Enable Turbopack

|

||||

|

||||

<Banner type="warning">

|

||||

**Note:** In the future this will be the default. Use at your own risk.

|

||||

**Note:** In the future this will be the default. Use as your own risk.

|

||||

</Banner>

|

||||

|

||||

Add `--turbo` to your dev script to significantly speed up your local development server start time.

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

---

|

||||

title: Form Builder Plugin

|

||||

label: Form Builder

|

||||

order: 30

|

||||

order: 40

|

||||

desc: Easily build and manage forms from the Admin Panel. Send dynamic, personalized emails and even accept and process payments.

|

||||

keywords: plugins, plugin, form, forms, form builder

|

||||

---

|

||||

|

||||

@@ -1,155 +0,0 @@

|

||||

---

|

||||

title: Import Export Plugin

|

||||

label: Import Export

|

||||

order: 40

|

||||

desc: Add Import and export functionality to create CSV and JSON data exports

|

||||

keywords: plugins, plugin, import, export, csv, JSON, data, ETL, download

|

||||

---

|

||||

|

||||

|

||||

|

||||

<Banner type="warning">

|

||||

**Note**: This plugin is in **beta** as some aspects of it may change on any

|

||||

minor releases. It is under development and currently only supports exporting

|

||||

of collection data.

|

||||

</Banner>

|

||||

|

||||

This plugin adds features that give admin users the ability to download or create export data as an upload collection and import it back into a project.

|

||||

|

||||

## Core Features

|

||||

|

||||

- Export data as CSV or JSON format via the admin UI

|

||||

- Download the export directly through the browser

|

||||

- Create a file upload of the export data

|

||||

- Use the jobs queue for large exports

|

||||

- (Coming soon) Import collection data

|

||||

|

||||

## Installation

|

||||

|

||||

Install the plugin using any JavaScript package manager like [pnpm](https://pnpm.io), [npm](https://npmjs.com), or [Yarn](https://yarnpkg.com):

|

||||

|

||||

```bash

|

||||

pnpm add @payloadcms/plugin-import-export

|

||||

```

|

||||

|

||||

## Basic Usage

|

||||

|

||||

In the `plugins` array of your [Payload Config](https://payloadcms.com/docs/configuration/overview), call the plugin with [options](#options):

|

||||

|

||||

```ts

|

||||

import { buildConfig } from 'payload'

|

||||

import { importExportPlugin } from '@payloadcms/plugin-import-export'

|

||||

|

||||

const config = buildConfig({

|

||||

collections: [Pages, Media],

|

||||

plugins: [

|

||||

importExportPlugin({

|

||||

collections: ['users', 'pages'],

|

||||

// see below for a list of available options

|

||||

}),

|

||||

],

|

||||

})

|

||||

|

||||

export default config

|

||||

```

|

||||

|

||||

## Options

|

||||

|

||||

| Property | Type | Description |

|

||||

| -------------------------- | -------- | ------------------------------------------------------------------------------------------------------------------------------------ |

|